RSyslog Windows Agent 4.1 Released

Adiscon is proud to announce the 4.1 release of RSyslog Windows Agent.

Rsyslog Windows Agent can now reload its configuration automatically if enabled (the configuration client enables this automatically on first start). It is no longer necessary to restart the service manually.

Performance-enhancing options have been added to EventLog Monitor V1 and V2 and to File Monitor to delay writing the last record/file position back to disk. This can increase performance on machines with a very high event log or file load.

Detailed information can be found in the version history below.

Build-IDs: Service 4.1.0.166, Client 4.1.0.246


Version 4.1 is a free download. Customers with existing 3.x keys can contact our Sales department for upgrade prices. If you have a valid Upgrade Insurance ID, you can request a free new key by sending your Upgrade Insurance ID to sales@adiscon.com. Please note that the download enables the free 30-day trial version if used without a key, so you can go ahead and evaluate it right away.

Using rsyslog to Reindex/Migrate Elasticsearch data

Original post: Scalable and Flexible Elasticsearch Reindexing via rsyslog by @Sematext

This recipe is useful in two scenarios:

- migrating data from one Elasticsearch cluster to another (e.g. when you’re upgrading from Elasticsearch 1.x to 2.x or later)

- reindexing data from one index to another in a cluster running a version older than 2.3. For clusters on version 2.3 or later, you can use the Reindex API
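For the second scenario on a newer cluster, a minimal sketch of a Reindex API call might look like the following. The host `localhost:9200` and the index names are placeholders, not values from this recipe; the request is only built here, and the commented-out last line is what would actually send it.

```python
import json
import urllib.request

def build_reindex_request(source_index, dest_index, host="http://localhost:9200"):
    """Build (but do not send) a POST /_reindex request."""
    body = {"source": {"index": source_index}, "dest": {"index": dest_index}}
    return urllib.request.Request(
        host + "/_reindex",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = build_reindex_request("old_logs", "new_logs")  # hypothetical index names
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
# urllib.request.urlopen(req)  # uncomment to run against a real cluster
```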

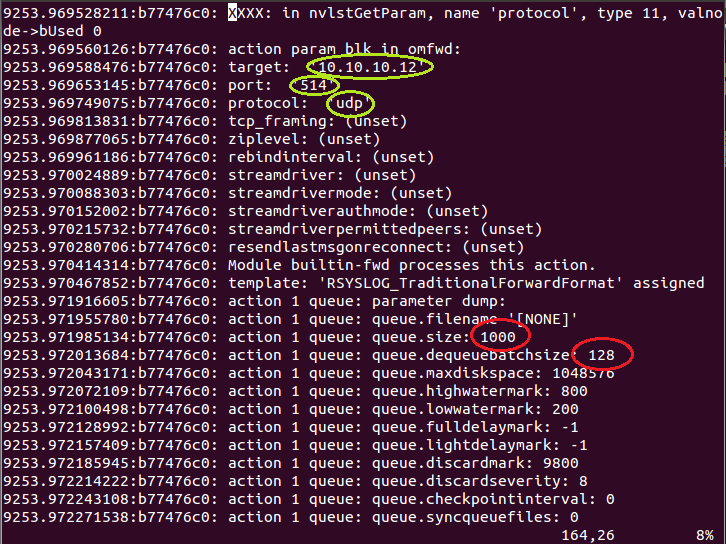

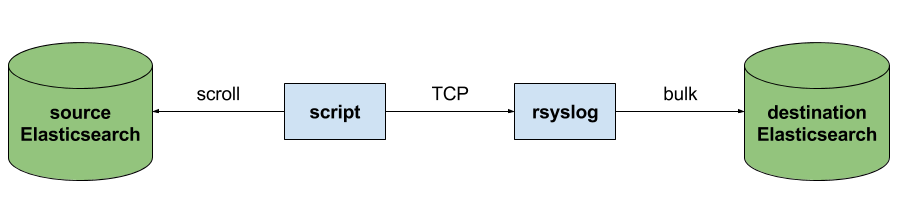

Back to the recipe, we used an external application to scroll through Elasticsearch documents in the source cluster and push them to rsyslog via TCP. Then we used rsyslog’s Elasticsearch output to push logs to the destination cluster. The overall flow would be:

This is an easy way to extend rsyslog, using whichever language you’re comfortable with, to support more inputs. Here, we piggyback on the TCP input. You can do a similar job with filters/parsers – you can find GeoIP implementations, for example – by piggybacking on the mmexternal module, which uses stdin and stdout for communication. The same is possible for outputs, normally added via the omprog module: we did this to add a Solr output and one for SPM custom metrics.
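To make the output case concrete, here is a minimal sketch of the kind of script omprog can drive. It assumes only that rsyslog writes one rendered message per line to the script's standard input; the `forward` function and its in-memory sink are hypothetical stand-ins for whatever destination you want to support.

```python
import io

def forward(stream, sink):
    """Read one message per line from stream and hand each to sink."""
    count = 0
    for line in stream:
        message = line.rstrip("\n")
        if not message:
            continue  # skip empty lines
        sink(message)  # replace with a call to your destination's API
        count += 1
    return count

# Demo with an in-memory stream; when started by omprog you would
# pass sys.stdin (after "import sys") instead of the StringIO object.
received = []
handled = forward(io.StringIO("first message\nsecond message\n"), received.append)
print(handled, received)
```

Keeping the loop separate from the sink makes it easy to test the script without rsyslog in the picture.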

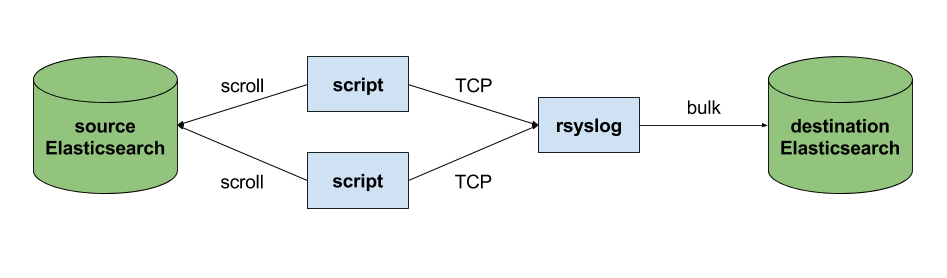

The custom script in question doesn’t have to be multi-threaded; you can simply spin up more of them, each scrolling different indices. In this particular case, using two scripts gave us slightly better throughput, saturating the network:

Writing the custom script

Before starting to write the script, one needs to know what the messages sent to rsyslog will look like. To be able to index data, rsyslog will need an index name, a type name and optionally an ID. In this particular case, we were dealing with logs, so the ID wasn’t necessary.

With this in mind, I see a number of ways of sending data to rsyslog:

- one big JSON per line. One can use mmnormalize to parse that JSON, which then allows rsyslog to use values from within it as index name, type name, and so on

- for each line, begin with the bits of “extra data” (like index and type names), then put the JSON document that you want to reindex. Again, you can use mmnormalize to parse, but this time you can simply trust that the last part is a JSON document and send it to Elasticsearch directly, without the need to parse it

- if you only need to pass two variables (index and type name, in this case), you can piggyback on the vague spec of RFC3164 syslog and send something like

destination_index document_type:{"original": "document"}

This last option will parse the provided index name in the hostname variable, the type in syslogtag and the original document in msg. A bit hacky, I know, but quite convenient (makes the rsyslog configuration straightforward) and very fast, since we know the RFC3164 parser is very quick and it runs on all messages anyway. No need for mmnormalize, unless you want to change the document in-flight with rsyslog.
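A small sketch of how such a line could be assembled, with a naive split that mirrors the mapping described above. The helper names are made up for illustration, and `split_line` is not rsyslog's parser, only a way to see which part lands where. It assumes index and type names contain no spaces or colons.

```python
import json

def build_line(index_name, type_name, document):
    """Format one document as '<index> <type>:<json>' plus a newline."""
    return index_name + " " + type_name + ":" + json.dumps(document) + "\n"

def split_line(line):
    """Naively split a line back into (index, type, document)."""
    index_name, rest = line.rstrip("\n").split(" ", 1)
    type_name, raw_json = rest.split(":", 1)
    return index_name, type_name, json.loads(raw_json)

line = build_line("destination_index", "document_type", {"original": "document"})
print(line, end="")
print(split_line(line))
```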

Below you can find the Python code that can scan through existing documents in an index (or index pattern, like logstash_2016.05.*) and push them to rsyslog via TCP. You’ll need the Python Elasticsearch client (pip install elasticsearch) and you’d run it like this:

python elasticsearch_to_rsyslog.py source_index destination_index

The script being:

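from elasticsearch import Elasticsearch
import json, socket, sys

source_cluster = ['server1', 'server2']
rsyslog_address = '127.0.0.1'
rsyslog_port = 5514

es = Elasticsearch(source_cluster,
                   retry_on_timeout=True,
                   max_retries=10)

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
s.connect((rsyslog_address, rsyslog_port))

# open a scan-type scroll; this first response carries the scroll ID but no hits
result = es.search(index=sys.argv[1], scroll='1m', search_type='scan', size=500)

while True:
    # fetch the next batch; keep it in "result" so the loop below sees the new
    # hits and the next iteration uses the latest scroll ID
    result = es.scroll(scroll_id=result['_scroll_id'], scroll='1m')
    for hit in result['hits']['hits']:
        # destination index as hostname, type as tag, document as message;
        # sendall() keeps sending until the whole line has gone out
        s.sendall((sys.argv[2] + ' ' + hit["_type"] + ':' + json.dumps(hit["_source"]) + '\n').encode('utf-8'))
    if not result['hits']['hits']:
        break

s.close()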

If you need to modify messages, you can parse them in rsyslog via mmjsonparse and then add/remove fields through rsyslog’s scripting language. However, I couldn’t find a nice way to change field names – for example to remove the dots that are forbidden since Elasticsearch 2.0 – so I did that in the Python script:

def de_dot(my_dict):
    # build a new dict instead of renaming keys while iterating over the old one
    clean = {}
    for key, value in my_dict.items():
        if isinstance(value, dict):
            # nested objects can have dotted field names too
            value = de_dot(value)
        clean[key.replace('.', '_')] = value
    return clean

And then the “send” line becomes:

s.sendall((sys.argv[2] + ' ' + hit["_type"] + ':' + json.dumps(de_dot(hit["_source"])) + '\n').encode('utf-8'))

Configuring rsyslog

The first step here is to make sure you have the latest rsyslog, though the config below works with versions all the way back to 7.x (which can be found in most Linux distributions). You just need to make sure the rsyslog-elasticsearch package is installed, because we need the Elasticsearch output module.

# messages bigger than this are truncated
$maxMessageSize 10000000 # ~10MB

# load the TCP input and the ES output modules
module(load="imtcp")
module(load="omelasticsearch")

main_queue(
  # buffer up to 1M messages in memory
  queue.size="1000000"
  # these threads process messages and send them to Elasticsearch
  queue.workerThreads="4"
  # rsyslog processes messages in batches to avoid queue contention
  # this will also be the Elasticsearch bulk size
  queue.dequeueBatchSize="4000"
)

# we use templates to specify how the data sent to Elasticsearch looks like
template(name="document" type="list"){
  # the "msg" variable contains the document
  property(name="msg")
}

template(name="index" type="list"){
  # "hostname" has the index name
  property(name="hostname")
}

template(name="type" type="list"){
  # "syslogtag" has the type name
  property(name="syslogtag")
}

# start the TCP listener on the port we pointed the Python script to
input(type="imtcp" port="5514")

# sending data to Elasticsearch, using the templates defined earlier
action(type="omelasticsearch"
  template="document"
  dynSearchIndex="on" searchIndex="index"
  dynSearchType="on" searchType="type"
  server="localhost" # destination Elasticsearch host
  serverport="9200" # and port
  bulkmode="on" # use the bulk API
  action.resumeretrycount="-1" # retry indefinitely if Elasticsearch is unreachable
)
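To see how the three templates come together, here is a rough Python illustration of an Elasticsearch bulk request body built from messages in the format the script sends. It is only a sketch of the bulk API's newline-delimited layout, not omelasticsearch's actual code, and the sample index, type and documents are invented.

```python
import json

def bulk_body(messages):
    """Build a bulk API body from (index, type, raw_json_document) tuples."""
    lines = []
    for index_name, type_name, raw_document in messages:
        # action line: where the document goes (no _id, so Elasticsearch generates one)
        lines.append(json.dumps({"index": {"_index": index_name, "_type": type_name}}))
        # source line: the document itself, passed through untouched
        lines.append(raw_document)
    return "\n".join(lines) + "\n"  # the bulk API expects a trailing newline

batch = [
    ("logs_new", "syslog", '{"message": "first"}'),
    ("logs_new", "syslog", '{"message": "second"}'),
]
print(bulk_body(batch), end="")
```

With queue.dequeueBatchSize="4000", a single request could carry up to 4000 such action/document pairs.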

This configuration doesn’t have to disturb your local syslog (i.e. by replacing /etc/rsyslog.conf). You can put it someplace else and run a different rsyslog process:

rsyslogd -i /var/run/rsyslog_reindexer.pid -f /home/me/rsyslog_reindexer.conf

And that’s it! With rsyslog started, you can start the Python script(s) and do the reindexing.
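Since the extra throughput came from running more than one script, a small launcher can start one copy per source index. This is a sketch: it assumes `elasticsearch_to_rsyslog.py` sits in the current directory, and the index names are placeholders.

```python
import subprocess
import sys

def build_commands(index_pairs, script="elasticsearch_to_rsyslog.py"):
    """Return one command line per (source_index, destination_index) pair."""
    return [[sys.executable, script, source, dest] for source, dest in index_pairs]

def run_all(commands):
    """Start every command at once, then wait and collect the exit codes."""
    processes = [subprocess.Popen(command) for command in commands]
    return [process.wait() for process in processes]

# placeholder index names; each script scrolls a different source index
pairs = [("logstash_2016.05.01", "logs_2016.05.01"),
         ("logstash_2016.05.02", "logs_2016.05.02")]
commands = build_commands(pairs)
for command in commands:
    print(" ".join(command))
# exit_codes = run_all(commands)  # uncomment to actually start the scripts
```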

Monitoring rsyslog’s impstats with Kibana and SPM

Original post: Monitoring rsyslog with Kibana and SPM by @Sematext

A while ago we published this post where we explained how you can get stats about rsyslog, such as the number of messages enqueued, the number of output errors and so on. The point was to send them to Elasticsearch (or Logsene, our logging SaaS, which exposes the Elasticsearch API) in order to analyze them.

This is part 2 of that story, where we share how we process these stats in production. We’ll cover:

- an updated config, working with Elasticsearch 2.x

- what Kibana dashboards we have in Logsene to get an overview of what rsyslog is doing

- how we send some of these metrics to SPM as well, in order to set up alerts on their values: both threshold-based alerts and anomaly detection


Connecting with Logstash via Apache Kafka

Original post: Recipe: rsyslog + Kafka + Logstash by @Sematext

This recipe is similar to the previous rsyslog + Redis + Logstash one, except that we’ll use Kafka as a central buffer and connecting point instead of Redis. You’ll have more of the same advantages:

- rsyslog is light and crazy-fast, including when you want it to tail files and parse unstructured data (see the Apache logs + rsyslog + Elasticsearch recipe)

- Kafka is awesome at buffering things

- Logstash can transform your logs and connect them to N destinations with unmatched ease

There are a couple of differences to the Redis recipe, though:

- rsyslog already has Kafka output packages, so it’s easier to set up

- Kafka has a different set of features than Redis (trying to avoid flame wars here) when it comes to queues and scaling

As with the other recipes, I’ll show you how to install and configure the needed components. The end result would be that local syslog (and tailed files, if you want to tail them) will end up in Elasticsearch, or a logging SaaS like Logsene (which exposes the Elasticsearch API for both indexing and searching). Of course you can choose to change your rsyslog configuration to parse logs as well (as we’ve shown before), and change Logstash to do other things (like adding GeoIP info).

Getting the ingredients

First of all, you’ll probably need to update rsyslog. Most distros come with ancient versions and don’t have the plugins you need. From the official packages you can install:

- rsyslog. This will update the base package, including the file-tailing module

- rsyslog-kafka. This will get you the Kafka output module

If you don’t have Kafka already, you can set it up by downloading the binary tar and following the quickstart guide. Basically you’ll have to start Zookeeper first (assuming you don’t have one already that you’d want to re-use):

bin/zookeeper-server-start.sh config/zookeeper.properties

And then start Kafka itself and create a simple 1-partition topic that we’ll use for pushing logs from rsyslog to Logstash. Let’s call it rsyslog_logstash:

bin/kafka-server-start.sh config/server.properties
bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic rsyslog_logstash

Finally, you’ll need Logstash. At the time of writing this, we have a beta of 2.0, which comes with lots of improvements (including huge performance gains for the GeoIP filter I touched on earlier). After downloading and unpacking, you can start it via:

bin/logstash -f logstash.conf

Though you also have packages, in which case you’d put the configuration file in /etc/logstash/conf.d/ and start it with the init script.

Configuring rsyslog

With rsyslog, you’d need to load the needed modules first:

module(load="imuxsock") # will listen to your local syslog
module(load="imfile") # if you want to tail files
module(load="omkafka") # lets you send to Kafka

If you want to tail files, you’d have to add definitions for each group of files like this:

input(type="imfile" File="/opt/logs/example*.log" Tag="examplelogs" )

Then you’d need a template that will build JSON documents out of your logs. You would publish these JSON documents to Kafka and consume them with Logstash. Here’s one that works well for plain syslog and tailed files that aren’t parsed via mmnormalize:

template(name="json_lines" type="list" option.json="on") {
  constant(value="{")
  constant(value="\"timestamp\":\"")
  property(name="timereported" dateFormat="rfc3339")
  constant(value="\",\"message\":\"")
  property(name="msg")
  constant(value="\",\"host\":\"")
  property(name="hostname")
  constant(value="\",\"severity\":\"")
  property(name="syslogseverity-text")
  constant(value="\",\"facility\":\"")
  property(name="syslogfacility-text")
  constant(value="\",\"syslog-tag\":\"")
  property(name="syslogtag")
  constant(value="\"}")
}
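For reference, the sketch below builds in Python the kind of document this template is meant to produce, so you can see the field names a consumer will receive. All values are invented for illustration, and `render_json_line` is a hypothetical helper, not part of rsyslog.

```python
import json

def render_json_line(timestamp, message, host, severity, facility, tag):
    """Mimic the template's output: one JSON object with six string fields."""
    return json.dumps({
        "timestamp": timestamp,
        "message": message,
        "host": host,
        "severity": severity,
        "facility": facility,
        "syslog-tag": tag,
    })

line = render_json_line("2016-05-01T12:00:00+00:00", ' said "hello"',
                        "web01", "info", "daemon", "myapp:")
print(line)
print(json.loads(line)["message"])
```

Note how json.dumps escapes the quotes inside the message; keeping values safe to embed in JSON is the job option.json="on" does in the template.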

By default, rsyslog has a memory queue of 10K messages and has a single thread that works with batches of up to 16 messages (you can find all queue parameters here). You may want to change:

- the batch size, which also controls the maximum number of messages to be sent to Kafka at once

- the number of threads, which would parallelize sending to Kafka as well

- the size of the queue and its nature: in-memory (default), disk or disk-assisted

In an rsyslog->Kafka->Logstash setup I assume you want to keep rsyslog light, so these numbers would be small, like:

main_queue(
  queue.workerthreads="1" # threads to work on the queue
  queue.dequeueBatchSize="100" # max number of messages to process at once
  queue.size="10000" # max queue size
)

Finally, to publish to Kafka you’d mainly specify the brokers to connect to (in this example we have one listening to localhost:9092) and the name of the topic we just created:

action(
  broker=["localhost:9092"]
  type="omkafka"
  topic="rsyslog_logstash"
  template="json_lines" # the template defined earlier
)

Assuming Kafka is started, rsyslog will keep pushing to it.
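To check that what lands in the topic is valid JSON, you could pipe the output of Kafka's console consumer into a small validator. The function below is a sketch that works on any iterable of lines; the sample lines are invented.

```python
import json

def count_valid_json(lines):
    """Return (valid, invalid) counts for an iterable of text lines."""
    valid = invalid = 0
    for line in lines:
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        try:
            json.loads(line)
            valid += 1
        except ValueError:  # json.JSONDecodeError is a subclass of ValueError
            invalid += 1
    return valid, invalid

sample = ['{"message": "ok", "host": "web01"}', '{"message": "truncated', ""]
print(count_valid_json(sample))
```

When reading piped input, you would pass sys.stdin in place of the sample list.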

Configuring Logstash

This is the part where we pick up the JSON logs (as defined in the earlier template) and forward them to the preferred destinations. First, we have the input, which will read from the Kafka topic we created. To connect, we’ll point Logstash to Zookeeper, and it will fetch all the info about Kafka from there:

input {
  kafka {
    zk_connect => "localhost:2181"
    topic_id => "rsyslog_logstash"
  }
}

At this point, you may want to use various filters to change your logs before pushing to Logsene/Elasticsearch. For this last step, you’d use the Elasticsearch output:

output {
  elasticsearch {
    hosts => "localhost" # it used to be "host" pre-2.0
    port => 9200
    #ssl => "true"
    #protocol => "http" # removed in 2.0
  }
}

And that’s it! Now you can use Kibana (or, in the case of Logsene, either Kibana or Logsene’s own UI) to search your logs!