Using rsyslog to Reindex/Migrate Elasticsearch data

Original post: Scalable and Flexible Elasticsearch Reindexing via rsyslog by @Sematext

This recipe is useful in a two scenarios:

- migrating data from one Elasticsearch cluster to another (e.g. when you’re upgrading from Elasticsearch 1.x to 2.x or later)

- reindexing data from one index to another in a cluster pre 2.3. For clusters on version 2.3 or later, you can use the Reindex API

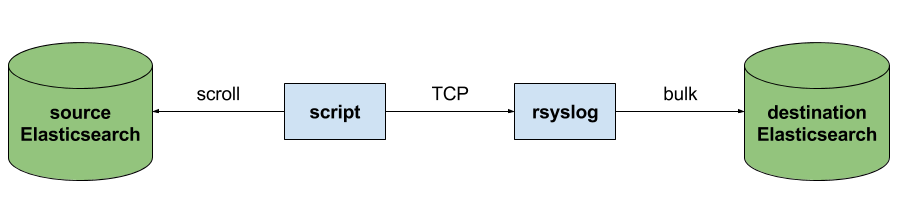

Back to the recipe, we used an external application to scroll through Elasticsearch documents in the source cluster and push them to rsyslog via TCP. Then we used rsyslog’s Elasticsearch output to push logs to the destination cluster. The overall flow would be:

This is an easy way to extend rsyslog, using whichever language you’re comfortable with, to support more inputs. Here, we piggyback on the TCP input. You can do a similar job with filters/parsers – you can find GeoIP implementations, for example – by piggybacking the mmexternal module, which uses stdout&stdin for communication. The same is possible for outputs, normally added via the omprog module: we did this to add a Solr output and one for SPM custom metrics.

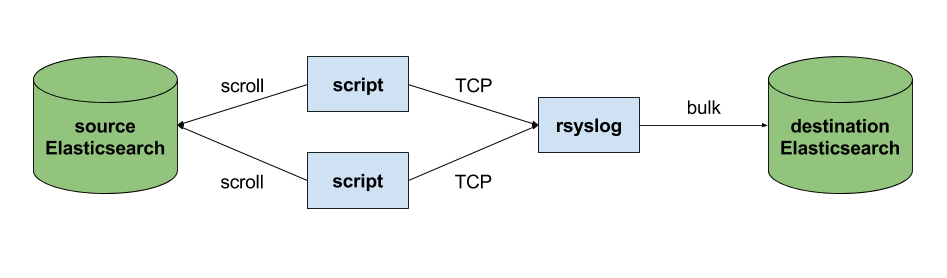

The custom script in question doesn’t have to be multi-threaded, you can simply spin up more of them, scrolling different indices. In this particular case, using two scripts gave us slightly better throughput, saturating the network:

Writing the custom script

Before starting to write the script, one needs to know how the messages sent to rsyslog would look like. To be able to index data, rsyslog will need an index name, a type name and optionally an ID. In this particular case, we were dealing with logs, so the ID wasn’t necessary.

With this in mind, I see a number of ways of sending data to rsyslog:

- one big JSON per line. One can use mmnormalize to parse that JSON, which then allows rsyslog do use values from within it as index name, type name, and so on

- for each line, begin with the bits of “extra data” (like index and type names) then put the JSON document that you want to reindex. Again, you can use mmnormalize to parse, but this time you can simply trust that the last thing is a JSON and send it to Elasticsearch directly, without the need to parse it

- if you only need to pass two variables (index and type name, in this case), you can piggyback on the vague spec of RFC3164 syslog and send something like

destination_index document_type:{"original": "document"}

This last option will parse the provided index name in the hostname variable, the type in syslogtag and the original document in msg. A bit hacky, I know, but quite convenient (makes the rsyslog configuration straightforward) and very fast, since we know the RFC3164 parser is very quick and it runs on all messages anyway. No need for mmnormalize, unless you want to change the document in-flight with rsyslog.

Below you can find the Python code that can scan through existing documents in an index (or index pattern, like logstash_2016.05.*) and push them to rsyslog via TCP. You’ll need the Python Elasticsearch client (pip install elasticsearch) and you’d run it like this:

python elasticsearch_to_rsyslog.py source_index destination_index

The script being:

from elasticsearch import Elasticsearch

import json, socket, sys

source_cluster = ['server1', 'server2']

rsyslog_address = '127.0.0.1'

rsyslog_port = 5514

es = Elasticsearch(source_cluster,

retry_on_timeout=True,

max_retries=10)

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

s.connect((rsyslog_address, rsyslog_port))

result = es.search(index=sys.argv[1], scroll='1m', search_type='scan', size=500)

while True:

res = es.scroll(scroll_id=result['_scroll_id'], scroll='1m')

for hit in result['hits']['hits']:

s.send(sys.argv[2] + ' ' + hit["_type"] + ':' + json.dumps(hit["_source"])+'\n')

if not result['hits']['hits']:

break

s.close()

If you need to modify messages, you can parse them in rsyslog via mmjsonparse and then add/remove fields though rsyslog’s scripting language. Though I couldn’t find a nice way to change field names – for example to remove the dots that are forbidden since Elasticsearch 2.0 – so I did that in the Python script:

def de_dot(my_dict):

for key, value in my_dict.iteritems():

if '.' in key:

my_dict[key.replace('.','_')] = my_dict.pop(key)

if type(value) is dict:

my_dict[key] = de_dot(my_dict.pop(key))

return my_dict

And then the “send” line becomes:

s.send(sys.argv[2] + ' ' + hit["_type"] + ':' + json.dumps(de_dot(hit["_source"]))+'\n')

Configuring rsyslog

The first step here is to make sure you have the lastest rsyslog, though the config below works with versions all the way back to 7.x (which can be found in most Linux distributions). You just need to make sure the rsyslog-elasticsearch package is installed, because we need the Elasticsearch output module.

# messages bigger than this are truncated

$maxMessageSize 10000000 # ~10MB

# load the TCP input and the ES output modules

module(load="imtcp")

module(load="omelasticsearch")

main_queue(

# buffer up to 1M messages in memory

queue.size="1000000"

# these threads process messages and send them to Elasticsearch

queue.workerThreads="4"

# rsyslog processes messages in batches to avoid queue contention

# this will also be the Elasticsearch bulk size

queue.dequeueBatchSize="4000"

)

# we use templates to specify how the data sent to Elasticsearch looks like

template(name="document" type="list"){

# the "msg" variable contains the document

property(name="msg")

}

template(name="index" type="list"){

# "hostname" has the index name

property(name="hostname")

}

template(name="type" type="list"){

# "syslogtag" has the type name

property(name="syslogtag")

}

# start the TCP listener on the port we pointed the Python script to

input(type="imtcp" port="5514")

# sending data to Elasticsearch, using the templates defined earlier

action(type="omelasticsearch"

template="document"

dynSearchIndex="on" searchIndex="index"

dynSearchType="on" searchType="type"

server="localhost" # destination Elasticsearch host

serverport="9200" # and port

bulkmode="on" # use the bulk API

action.resumeretrycount="-1" # retry indefinitely if Elasticsearch is unreachable

)

This configuration doesn’t have to disturb your local syslog (i.e. by replacing /etc/rsyslog.conf). You can put it someplace else and run a different rsyslog process:

rsyslogd -i /var/run/rsyslog_reindexer.pid -f /home/me/rsyslog_reindexer.conf

And that’s it! With rsyslog started, you can start the Python script(s) and do the reindexing.

Connecting with Logstash via Apache Kafka

Original post: Recipe: rsyslog + Kafka + Logstash by @Sematext

This recipe is similar to the previous rsyslog + Redis + Logstash one, except that we’ll use Kafka as a central buffer and connecting point instead of Redis. You’ll have more of the same advantages:

- rsyslog is light and crazy-fast, including when you want it to tail files and parse unstructured data (see the Apache logs + rsyslog + Elasticsearch recipe)

- Kafka is awesome at buffering things

- Logstash can transform your logs and connect them to N destinations with unmatched ease

There are a couple of differences to the Redis recipe, though:

- rsyslog already has Kafka output packages, so it’s easier to set up

- Kafka has a different set of features than Redis (trying to avoid flame wars here) when it comes to queues and scaling

As with the other recipes, I’ll show you how to install and configure the needed components. The end result would be that local syslog (and tailed files, if you want to tail them) will end up in Elasticsearch, or a logging SaaS like Logsene (which exposes the Elasticsearch API for both indexing and searching). Of course you can choose to change your rsyslog configuration to parse logs as well (as we’ve shown before), and change Logstash to do other things (like adding GeoIP info).

Getting the ingredients

First of all, you’ll probably need to update rsyslog. Most distros come with ancient versions and don’t have the plugins you need. From the official packages you can install:

- rsyslog. This will update the base package, including the file-tailing module

- rsyslog-kafka. This will get you the Kafka output module

If you don’t have Kafka already, you can set it up by downloading the binary tar. And then you can follow the quickstart guide. Basically you’ll have to start Zookeeper first (assuming you don’t have one already that you’d want to re-use):

bin/zookeeper-server-start.sh config/zookeeper.properties

And then start Kafka itself and create a simple 1-partition topic that we’ll use for pushing logs from rsyslog to Logstash. Let’s call it rsyslog_logstash:

bin/kafka-server-start.sh config/server.properties bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic rsyslog_logstash

Finally, you’ll have Logstash. At the time of writing this, we have a beta of 2.0, which comes with lots of improvements (including huge performance gains of the GeoIP filter I touched on earlier). After downloading and unpacking, you can start it via:

bin/logstash -f logstash.conf

Though you also have packages, in which case you’d put the configuration file in /etc/logstash/conf.d/ and start it with the init script.

Configuring rsyslog

With rsyslog, you’d need to load the needed modules first:

module(load="imuxsock") # will listen to your local syslog module(load="imfile") # if you want to tail files module(load="omkafka") # lets you send to Kafka

If you want to tail files, you’d have to add definitions for each group of files like this:

input(type="imfile" File="/opt/logs/example*.log" Tag="examplelogs" )

Then you’d need a template that will build JSON documents out of your logs. You would publish these JSON’s to Kafka and consume them with Logstash. Here’s one that works well for plain syslog and tailed files that aren’t parsed via mmnormalize:

template(name="json_lines" type="list" option.json="on") {

constant(value="{")

constant(value="\"timestamp\":\"")

property(name="timereported" dateFormat="rfc3339")

constant(value="\",\"message\":\"")

property(name="msg")

constant(value="\",\"host\":\"")

property(name="hostname")

constant(value="\",\"severity\":\"")

property(name="syslogseverity-text")

constant(value="\",\"facility\":\"")

property(name="syslogfacility-text")

constant(value="\",\"syslog-tag\":\"")

property(name="syslogtag")

constant(value="\"}")

}

By default, rsyslog has a memory queue of 10K messages and has a single thread that works with batches of up to 16 messages (you can find all queue parameters here). You may want to change:

– the batch size, which also controls the maximum number of messages to be sent to Kafka at once

– the number of threads, which would parallelize sending to Kafka as well

– the size of the queue and its nature: in-memory(default), disk or disk-assisted

In a rsyslog->Kafka->Logstash setup I assume you want to keep rsyslog light, so these numbers would be small, like:

main_queue( queue.workerthreads="1" # threads to work on the queue queue.dequeueBatchSize="100" # max number of messages to process at once queue.size="10000" # max queue size )

Finally, to publish to Kafka you’d mainly specify the brokers to connect to (in this example we have one listening to localhost:9092) and the name of the topic we just created:

action( broker=["localhost:9092"] type="omkafka" topic="rsyslog_logstash" template="json" )

Assuming Kafka is started, rsyslog will keep pushing to it.

Configuring Logstash

This is the part where we pick the JSON logs (as defined in the earlier template) and forward them to the preferred destinations. First, we have the input, which will use to the Kafka topic we created. To connect, we’ll point Logstash to Zookeeper, and it will fetch all the info about Kafka from there:

input {

kafka {

zk_connect => "localhost:2181"

topic_id => "rsyslog_logstash"

}

}

At this point, you may want to use various filters to change your logs before pushing to Logsene/Elasticsearch. For this last step, you’d use the Elasticsearch output:

output {

elasticsearch {

hosts => "localhost" # it used to be "host" pre-2.0

port => 9200

#ssl => "true"

#protocol => "http" # removed in 2.0

}

}

And that’s it! Now you can use Kibana (or, in the case of Logsene, either Kibana or Logsene’s own UI) to search your logs!

Recipe: Apache Logs + rsyslog (parsing) + Elasticsearch

Original post: Recipe: Apache Logs + rsyslog (parsing) + Elasticsearch by @Sematext

This recipe is about tailing Apache HTTPD logs with rsyslog, parsing them into structured JSON documents, and forwarding them to Elasticsearch (or a log analytics SaaS, like Logsene, which exposes the Elasticsearch API). Having them indexed in a structured way will allow you to do better analytics with tools like Kibana:

We’ll also cover pushing logs coming from the syslog socket and kernel, and how to buffer all of them properly. So this is quite a complete recipe for your centralized logging needs.

Getting the ingredients

Even though most distros already have rsyslog installed, it’s highly recommended to get the latest stable from the rsyslog repositories. The packages you’ll need are:

- rsyslog. The base package, including the file-tailing module (imfile)

- rsyslog-mmnormalize. This gives you mmnormalize, a module that will do the parsing of common Apache logs to JSON

- rsyslog-elasticsearch, for the Elasticsearch output

With the ingredients in place, let’s start cooking a configuration. The configuration needs to do the following:

- load the required modules

- configure inputs: tailing Apache logs and system logs

- configure the main queue to buffer your messages. This is also the place to define the number of worker threads and batch sizes (which will also be Elasticsearch bulk sizes)

- parse common Apache logs into JSON

- define a template where you’d specify how JSON messages would look like. You’d use this template to send logs to Logsene/Elasticsearch via the Elasticsearch output

Loading modules

Here, we’ll need imfile to tail files, mmnormalize to parse them, and omelasticsearch to send them. If you want to tail the system logs, you’d also need to include imuxsock and imklog (for kernel logs).

# system logs module(load="imuxsock") module(load="imklog") # file module(load="imfile") # parser module(load="mmnormalize") # sender module(load="omelasticsearch")

Configure inputs

For system logs, you typically don’t need any special configuration (unless you want to listen to a non-default Unix Socket). For Apache logs, you’d point to the file(s) you want to monitor. You can use wildcards for file names as well. You also need to specify a syslog tag for each input. You can use this tag later for filtering.

input(type="imfile"

File="/var/log/apache*.log"

Tag="apache:"

)NOTE: By default, rsyslog will not poll for file changes every N seconds. Instead, it will rely on the kernel (via inotify) to poke it when files get changed. This makes the process quite realtime and scales well, especially if you have many files changing rarely. Inotify is also less prone to bugs when it comes to file rotation and other events that would otherwise happen between two “polls”. You can still use the legacy mode=”polling” by specifying it in imfile’s module parameters.

Queue and workers

By default, all incoming messages go into a main queue. You can also separate flows (e.g. files and system logs) by using different rulesets but let’s keep it simple for now.

For tailing files, this kind of queue would work well:

main_queue( queue.workerThreads="4" queue.dequeueBatchSize="1000" queue.size="10000" )

This would be a small in-memory queue of 10K messages, which works well if Elasticsearch goes down, because the data is still in the file and rsyslog can stop tailing when the queue becomes full, and then resume tailing. 4 worker threads will pick batches of up to 1000 messages from the queue, parse them (see below) and send the resulting JSONs to Elasticsearch.

If you need a larger queue (e.g. if you have lots of system logs and want to make sure they’re not lost), I would recommend using a disk-assisted memory queue, that will spill to disk whenever it uses too much memory:

main_queue( queue.workerThreads="4" queue.dequeueBatchSize="1000" queue.highWatermark="500000" # max no. of events to hold in memory queue.lowWatermark="200000" # use memory queue again, when it's back to this level queue.spoolDirectory="/var/run/rsyslog/queues" # where to write on disk queue.fileName="stats_ruleset" queue.maxDiskSpace="5g" # it will stop at this much disk space queue.size="5000000" # or this many messages queue.saveOnShutdown="on" # save memory queue contents to disk when rsyslog is exiting )

Parsing with mmnormalize

The message normalization module uses liblognorm to do the parsing. So in the configuration you’d simply point rsyslog to the liblognorm rulebase:

action(type="mmnormalize" rulebase="/opt/rsyslog/apache.rb" )

where apache.rb will contain rules for parsing apache logs, that can look like this:

version=2 rule=:%clientip:word% %ident:word% %auth:word% [%timestamp:char-to:]%] "%verb:word% %request:word% HTTP/%httpversion:float%" %response:number% %bytes:number% "%referrer:char-to:"%" "%agent:char-to:"%"%blob:rest%

Where version=2 indicates that rsyslog should use liblognorm’s v2 engine (which is was introduced in rsyslog 8.13) and then you have the actual rule for parsing logs. You can find more details about configuring those rules in the liblognorm documentation.

Besides parsing Apache logs, creating new rules typically requires a lot of trial and error. To check your rules without messing with rsyslog, you can use the lognormalizer binary like:

head -1 /path/to/log.file | /usr/lib/lognorm/lognormalizer -r /path/to/rulebase.rb -e json

NOTE: If you’re used to Logstash’s grok, this kind of parsing rules will look very familiar. However, things are quite different under the hood. Grok is a nice abstraction over regular expressions, while liblognorm builds parse trees out of specialized parsers. This makes liblognorm much faster, especially as you add more rules. In fact, it scales so well, that for all practical purposes, performance depends on the length of the log lines and not on the number of rules. This post explains the theory behind this assuption, and this is actually proven by various tests. The downside is that you’ll lose some of the flexibility offered by regular expressions. You can still use regular expressions with liblognorm (you’d need to set allow_regex to on when loading mmnormalize) but then you’d lose a lot of the benefits that come with the parse tree approach.

Template for parsed logs

Since we want to push logs to Elasticsearch as JSON, we’d need to use templates to format them. For Apache logs, by the time parsing ended, you already have all the relevant fields in the $!all-json variable, that you’ll use as a template:

template(name="all-json" type="list"){

property(name="$!all-json")

}Template for time-based indices

For the logging use-case, you’d probably want to use time-based indices (e.g. if you keep your logs for 7 days, you can have one index per day). Such a design will give your cluster a lot more capacity due to the way Elasticsearch merges data in the background (you can learn the details in our presentations at GeeCON and Berlin Buzzwords).

To make rsyslog use daily or other time-based indices, you need to define a template that builds an index name off the timestamp of each log. This is one that names them logstash-YYYY.MM.DD, like Logstash does by default:

template(name="logstash-index"

type="list") {

constant(value="logstash-")

property(name="timereported" dateFormat="rfc3339" position.from="1" position.to="4")

constant(value=".")

property(name="timereported" dateFormat="rfc3339" position.from="6" position.to="7")

constant(value=".")

property(name="timereported" dateFormat="rfc3339" position.from="9" position.to="10")

}And then you’d use this template in the Elasticsearch output:

action(type="omelasticsearch" template="all-json" dynSearchIndex="on" searchIndex="logstash-index" searchType="apache" server="MY-ELASTICSEARCH-SERVER" bulkmode="on" action.resumeretrycount="-1" )

Putting both Apache and system logs together

If you use the same rsyslog to parse system logs, mmnormalize won’t parse them (because they don’t match Apache’s common log format). In this case, you’ll need to pick the rsyslog properties you want and build an additional JSON template:

template(name="plain-syslog"

type="list") {

constant(value="{")

constant(value="\"timestamp\":\"") property(name="timereported" dateFormat="rfc3339")

constant(value="\",\"host\":\"") property(name="hostname")

constant(value="\",\"severity\":\"") property(name="syslogseverity-text")

constant(value="\",\"facility\":\"") property(name="syslogfacility-text")

constant(value="\",\"tag\":\"") property(name="syslogtag" format="json")

constant(value="\",\"message\":\"") property(name="msg" format="json")

constant(value="\"}")

}Then you can make rsyslog decide: if a log was parsed successfully, use the all-json template. If not, use the plain-syslog one:

if $parsesuccess == "OK" then {

action(type="omelasticsearch"

template="all-json"

...

)

} else {

action(type="omelasticsearch"

template="plain-syslog"

...

)

}And that’s it! Now you can restart rsyslog and get both your system and Apache logs parsed, buffered and indexed into Elasticsearch. If you’re a Logsene user, the recipe is a bit simpler: you’d follow the same steps, except that you’ll skip the logstash-index template (Logsene does that for you) and your Elasticsearch actions will look like this:

action(type="omelasticsearch" template="all-json or plain-syslog" searchIndex="LOGSENE-APP-TOKEN-GOES-HERE" searchType="apache" server="logsene-receiver.sematext.com" serverport="80" bulkmode="on" action.resumeretrycount="-1" )

Coupling with Logstash via Redis

Original post: Recipe: rsyslog + Redis + Logstash by @Sematext

OK, so you want to hook up rsyslog with Logstash. If you don’t remember why you want that, let me give you a few hints:

- Logstash can do lots of things, it’s easy to set up but tends to be too heavy to put on every server

- you have Redis already installed so you can use it as a centralized queue. If you don’t have it yet, it’s worth a try because it’s very light for this kind of workload.

- you have rsyslog on pretty much all your Linux boxes. It’s light and surprisingly capable, so why not make it push to Redis in order to hook it up with Logstash?

In this post, you’ll see how to install and configure the needed components so you can send your local syslog (or tail files with rsyslog) to be buffered in Redis so you can use Logstash to ship them to Elasticsearch, a logging SaaS like Logsene (which exposes the Elasticsearch API for both indexing and searching) so you can search and analyze them with Kibana:

Tutorial: Sending impstats Metrics to Elasticsearch Using Rulesets and Queues

Originally posted on the Sematext blog: Monitoring rsyslog’s Performance with impstats and Elasticsearch

If you’re using rsyslog for processing lots of logs (and, as we’ve shown before, rsyslog is good at processing lots of logs), you’re probably interested in monitoring it. To do that, you can use impstats, which comes from input module for process stats. impstats produces information like:

– input stats, like how many events went through each input

– queue stats, like the maximum size of a queue

– action (output or message modification) stats, like how many events were forwarded by each action

– general stats, like CPU time or memory usage

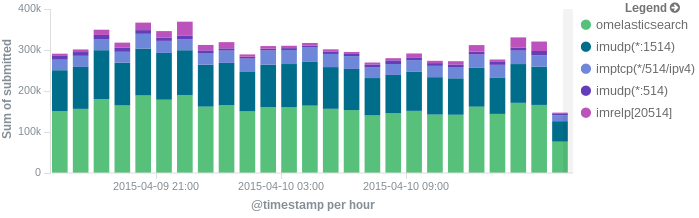

In this post, we’ll show you how to send those stats to Elasticsearch (or Logsene — essentially hosted ELK, our log analytics service, that exposes the Elasticsearch API), where you can explore them with a nice UI, like Kibana. For example get the number of logs going through each input/output per hour:

More precisely, we’ll look at:

– useful options around impstats

– how to use those stats and what they’re about

– how to ship stats to Elasticsearch/Logsene by using rsyslog’s Elasticsearch output

– how to do this shipping in a fast and reliable way. This will apply to most rsyslog use-cases, not only impstats

Continue reading “Tutorial: Sending impstats Metrics to Elasticsearch Using Rulesets and Queues”

rsyslog and ElasticSearch

by Micah Yoder, originally published on rackspace. Minor changes through Adiscon.

There is a clear benefit to being able to aggregate logs from various servers and services into one place and be able to search them for any sort of arbitrary event. Traditional syslog can aggregate logs, but aggregating events from them sometimes involves grep and convoluted regular expressions. Logging structured data to a database makes a lot of sense. rsyslog and ElasticSearch can do that, but figuring out how to get it to work from the rsyslog documentation can be difficult. Let’s start from the beginning.

First, you need the newest stable rsyslog, 7.4.x. The older 7.2 won’t cut it. You need the plug-ins mmnormalize and omelasticsearch, both of which are available from rsyslog’s yum repositories for RHEL/CentOS. mmnormalize requires some packages from EPEL so that will need to be added to the server as well.

Let’s walk through this from the beginning, starting with the most basic rsyslog configuration:

module(load="imuxsock") # provides support for local system logging (e.g. via logger command)

module(load="imklog") # provides kernel logging support (previously done by rklogd)

$ActionFileDefaultTemplate RSYSLOG_TraditionalFileFormat

*.* /var/log/messages

This simply loads the necessary modules for basic logging operation, sets the normal log format, and sends everything to /var/log/messages. With this if you issue this command:

# logger 'hello world!'

You get this in /var/log/messages:

Mar 27 14:16:47 micahsyslog root: hello world!

There are several parts here: the date/time, the hostname, the syslog tag or program (root), and the message. Another example:

# service sshd restart

yields:

Mar 27 14:19:27 micahsyslog sshd[23295]: Received signal 15; terminating.

Mar 27 14:19:27 micahsyslog sshd[16602]: Server listening on 0.0.0.0 port 22.

Mar 27 14:19:27 micahsyslog sshd[16602]: Server listening on :: port 22.

By the way, if we remove the ActionFileDefaultTemplate directive, we get the default line with an ISO time, which is nicer for automatic processing but a bit less readable for humans:

2013-03-27T21:51:29.139796+00:00 micahsyslog root: Hello world #2!

That is good to know, but actually irrelevant for putting data into ElasticSearch. If you also log to text files, you can decide whether to use the ISO time or the more human readable time.

Now let’s try to get it to normalize messages from the log! For this example we will need rsyslog running with ActionFileDefaultTemplate directive (keep it in for now, but you can remove it later if you want).

Create a rulebase file, which will be used by liblognorm. liblognorm is a library written by Rainer Gerhards, the author of rsyslog, which parses log messages according to a pattern and extracts variables from them. We can create the file as /etc/rsyslog.d/rules.rb and put in these contents:

prefix=%date:date-rfc3164% %hostname:word% %tag:word%

rule=user: new user: name=%user:char-to:,%, UID=%uid:number%, GID=%gid:number%, home=%home:char-to:,%, shell=%shell:word%

rule=test: Hello %x:number%

Now if we log something:

# logger “Hello 55”

We should be able to get something like this:

# lognormalizer -r rsyslog.d/rules.rb -t test < /var/log/messages

[cee@115 event.tags="test" x="55" tag="root:" hostname="micahsyslog" date="Mar 27 22:53:30"]

See what happened? The lognormalizer command, which uses liblognorm, was able to pull out the number in the log as variable “x” according to our “test” rule.

A short description of the rule base is in order. The prefix line contains a prefix that will be applied to all following rules. A rule is defined by a line beginning with the word “rule”, followed by an “=” sign, an optional tag, then a “:”. A space should then follow – but the space is actually part of the rule! There will be a space after the prefix, so it needs to be there.

Documentation on the rulebase file can be found here: http://www.liblognorm.com/files/manual/index.html Then navigate to How to use liblognorm → Rulebase. This will show you all the directives you can use.

Now we need to get the data into ElasticSearch. First we need to install it, which is very simple (at least for a single test node). Just extract its tarball, change into the base directory, and run:

# bin/elasticsearch -f

The -f causes it to run in the foreground so you can see what is going on.

We then add this to rsyslog.conf:

module(load="mmnormalize")

module(load="omelasticsearch")

template(name="test" type="subtree" subtree="$!")

set $!fac = $syslogfacility;

set $!host = $hostname;

set $!sev = $syslogseverity;

set $!time = $timereported;

set $!tgen = $timegenerated;

set $!tag = $syslogtag;

set $!prog = $programname;

action(type="omelasticsearch" server="localhost" template="test")

So what is all this? First we load the modules to normalize the log messages and for export to ElasticSearch. These should be placed near the top of your rsyslog.conf file with any other includes.

The template statement tells which part of the CEE data should be sent to ElasticSearch. Here a brief explanation of the data structure is necessary.

JSON (the format for CEE logging data and the native format for ElasticSearch) is a hierarchical data structure. The root of the data structure in rsyslog is $! – something like the ‘{}’ in a JSON document. Assigning to $!data1 would put a value into the “data1” top level JSON element {“data”: “…”}. Trees can be built. If you assign to $!tree1!child1, you will get a JSON document like this: {“tree1: {“child1”: “…”}}

This rsyslog document lists the available properties that you can use to populate these CEE variables.

As of version 7.5.8, 7.6.0 and 8.1.4, not only the standard rsyslog properties and constants can be assigned to these variables. There is now a way to assign the result of an rsyslog template, which allows more complex values. That is what you need to put a sortable timestamp into the document. More information can be found in the guide to use “set variable and exec_template“.

As this was not available at the time of writing I needed to get around this limitation (sort of), so I created an ElasticSearch index with a timestamp. Here is the command I used:

curl -XPUT http://elasticsearch.hostname:9200/logs -d '{"mappings":{"events":{"_timestamp":{"enabled": true, "store": "yes"},"prog":{"store":"yes"},"host":{"store":"yes"}}}}'

This also “stores” the host and prog syslog fields, which should help with querying based on the host or program. This will create an index called “logs”. ElasticSearch by default inserts events into the “system” index so you will want to specify the index name in your omelasticsearch line in rsyslog.conf (or one of its includes):

action(type="omelasticsearch" server="elasticsearch.hostname" template="cee_template_name" searchIndex="logs" bulkmode="on")

We also enabled bulk mode, which allows rsyslog to send many events at once to ElasticSearch. This will greatly improve performance.

Now you can query it!

curl 'http://elasticsearch.hostname:9200/logs/_search?pretty=1&fields=_source,_timestamp&size=100' -d '{"sort":{"_timestamp": "desc"}}' | less

The “pretty=1” and piping it to less are simply there to pretty print the JSON result and make it easy for you to browse the data set. A real search program, of course, would not turn on pretty mode and the code would directly consume the JSON.

You can add parameters after the ‘size=100’ (which, of course, says to return the 100 most recent results).

- &q=word will search the logs for that word in any field.

- &q=prog:name will search for ‘name’ in the syslog program field. Examples: postfix, sudo, crontab

- &q=host:hostname will find events for that hostname. Just use the base hostname, not a FQDN.

And that should get you started! Hopefully this is helpful.

Storing and forwarding remote messages

In this scenario, we want to store remote sent messages into a specific local file and forward the received messages to another syslog server. Local messages should still be locally stored.

Things to think about

How should this work out? Basically, we need a syslog listener for TCP and one for UDP, the local logging service and two rulesets, one for the local logging and one for the remote logging.

TCP recpetion is not a build-in capability. You need to load the imtcp plugin in order to enable it. This needs to be done only once in rsyslog.conf. Do it right at the top.

Note that the server port address specified in $InputTCPServerRun must match the port address that the clients send messages to.

Config Statements

# Modules

$ModLoad imtcp $ModLoad imudp $ModLoad imuxsock $ModLoad imklog

# Templates

# log every host in its own directory $template RemoteHost,"/var/syslog/hosts/%HOSTNAME%/%$YEAR%/%$MONTH%/%$DAY%/syslog.log"

### Rulesets

# Local Logging $RuleSet local kern.* /var/log/messages *.info;mail.none;authpriv.none;cron.none /var/log/messages authpriv.* /var/log/secure mail.* -/var/log/maillog cron.* /var/log/cron *.emerg * uucp,news.crit /var/log/spooler local7.* /var/log/boot.log # use the local RuleSet as default if not specified otherwise $DefaultRuleset local # Remote Logging $RuleSet remote *.* ?RemoteHost # Send messages we receive to Gremlin *.* @@W.X.Y.Z:514

### Listeners

# bind ruleset to tcp listener $InputTCPServerBindRuleset remote # and activate it: $InputTCPServerRun 10514 $InputUDPServerBindRuleset remote $UDPServerRun 514

How it works

The configuration basically works in 4 parts. First, we load all the modules (imtcp, imudp, imuxsock, imklog). Then we specify the templates for creating files. The we create the rulesets which we can use for the different receivers. And last we set the listeners.

The rulesets are somewhat interesting to look at. The ruleset “local” will be set as the default ruleset. That means, that it will be used by any listener if it is not specified otherwise. Further, this ruleset uses the default log paths vor various facilities and severities.

The ruleset “remote” on the other hand takes care of the local logging and forwarding of all log messages that are received either via UDP or TCP. First, all the messages will be stored in a local file. The filename will be generated with the help of the template at the beginning of our configuration (in our example a rather complex folder structure will be used). After logging into the file, all the messages will be forwarded to another syslog server via TCP.

In the last part of the configuration we set the syslog listeners. We first bind the listener to the ruleset “remote”, then we give it the directive to run the listener with the port to use. In our case we use 10514 for TCP and 514 for UDP.

Important

There are some tricks in this configuration. Since we are actively using the rulesets, we must specify those rulesets before being able to bind them to a listener. That means, the order in the configuration is somewhat different than usual. Usually we would put the listener commands on top of the configuration right after the modules. Now we need to specify the rulesets first, then set the listeners (including the bind command). This is due to the current configuration design of rsyslog. To bind a listener to a ruleset, the ruleset object must at least be present before the listener is created. And that is why we need this kind of order for our configuration.

Using the Text File Input Module

Log files should be processed by rsyslog. Here is some information on how the file monitor works. This will only describe setting up the Text File Input Module. Further configuration like processing rules or output methods will not be described.

Things to think about

The configuration given here should be placed on top of the rsyslog.conf file.

Config Statements

module(load="imfile" PollingInterval="10") # needs to be done just once. PollingInterval is a module directive and is only set once when loading the module

# File 1 input(type="imfile" File="/path/to/file1" Tag="tag1" StateFile="/var/spool/rsyslog/statefile1" Severity="error" Facility="local7") # File 2 input(type="imfile" File="/path/to/file2" Tag="tag2" StateFile="/var/spool/rsyslog/statefile2") # ... and so on ... #

How it works

The configuration for using the Text File Input Module is very extensive. At the beginning of your rsyslog configuration file, you always load the modules. There you need to load the module for Text File Input as well. Like all other modules, this has to be made just once. Please note that the directive PollingInterval is a module directive which needs to be set when loading the module.

module(load="imfile" PollingInterval="10")

Next up comes the input and its parameters. We configure a input of a certain type and then set the parameters to be used by this input. This is basically the same principle for all inputs:

# File 1 input(type="imfile" File="/path/to/file1" Tag="tag1" StateFile="/var/spool/rsyslog/statefile1" Severity="error" Facility="local7")

File specifies, the path and name of the text file that should be monitored. The file name must be absolute.

Tag will set a tag in front of each message pulled from the file. If you want a colon after the tag you must set it as well, it will not be added automatically.

StateFile will create a file where rsyslog keeps track of the position it currently is in a file. You only need to set the filename. This file always is created in the rsyslog working directory (configurable via $WorkDirectory). This file is important so rsyslog will not pull messages from the beginning of the file when being restarted.

Severity will give all log messages of a file the same severity. This is optional. By default all mesages will be set to “notice”.

Facility gives alle log messages of a file the same facility. Again, this is optional. By default all messages will be set to “local0”.

These statements are needed for monitoring a file. There are other statements described in the doc, which you might want to use. If you want to monitor another file the statements must be repeated.

Since the files cannot be monitored in genuine real time (which generates too much processing effort) you need to set a polling interval:

PollingInterval 10

This is a module setting and it defines the interval in which the log files will be polled. By default this value is set to 10 seconds. If you want this to get more near realtime, you can decrease the value, though this is not suggested due to increasing processing load. Setting this to 0 is supported, but not suggested. Rsyslog will continue reading the file as long as there are unprocessed messages in it. The interval only takes effect once rsyslog reaches the end of the file.

Important

The StateFile needs to be unique for every file that is monitored. If not, strange things could happen.

On the Use of English

I ventured to write this book in English because …

it will be more easily read in poor English,

than in good German by 90% of my intended readers.

— HANS J. STETTER, Analysis of Discretization Methods for

Ordinary Differential Equations (1973)

There is not much I could add to Mr. Stetter’s thought, except, maybe, that the number to quote probably tends more to 99% in this case than to the 90% Mr. Stetter notes. So please pardon those errors in language use that I have not yet been able to fix or even see. Suggestions for corrections and improvements are always welcome.

What this book is about

This book offers a cookbook-approach to configuring rsyslog. While the official documentation focusses on concepts, components and configuration statements, this book takes a completely different approach. It will not tell you about rsyslog concepts. Instead, it will offer a wide-range of recipies for configuring rsyslog so that it performs some specific task.

The individual recipies are presented with a problem description, some key facts (if necessary), required rsyslog version and the necessary configuration statements. These statements do their job, but not more. I tried to make the configurations reusable and free of side-effects. So you should be able to combine some of these recipies to form a final configuration that works exactly like you need it – just from applying the recipies. The core idea is that this should work just like in real cooking – there, you combine several recipies to create a great five-course meal. However, in cooking there are some recipies that you usually can not combine well within a single meal. The rsyslog equivalent is configurations with side effects, which may not always avoidable. If one of the configurations here has side-effects, you will be warned. It then probably is better to think twice before combining it.