Coupling with Logstash via Redis

Original post: Recipe: rsyslog + Redis + Logstash by @Sematext

OK, so you want to hook up rsyslog with Logstash. If you don’t remember why you want that, let me give you a few hints:

- Logstash can do lots of things and it’s easy to set up, but it tends to be too heavy to put on every server

- you have Redis already installed so you can use it as a centralized queue. If you don’t have it yet, it’s worth a try because it’s very light for this kind of workload.

- you have rsyslog on pretty much all your Linux boxes. It’s light and surprisingly capable, so why not make it push to Redis in order to hook it up with Logstash?

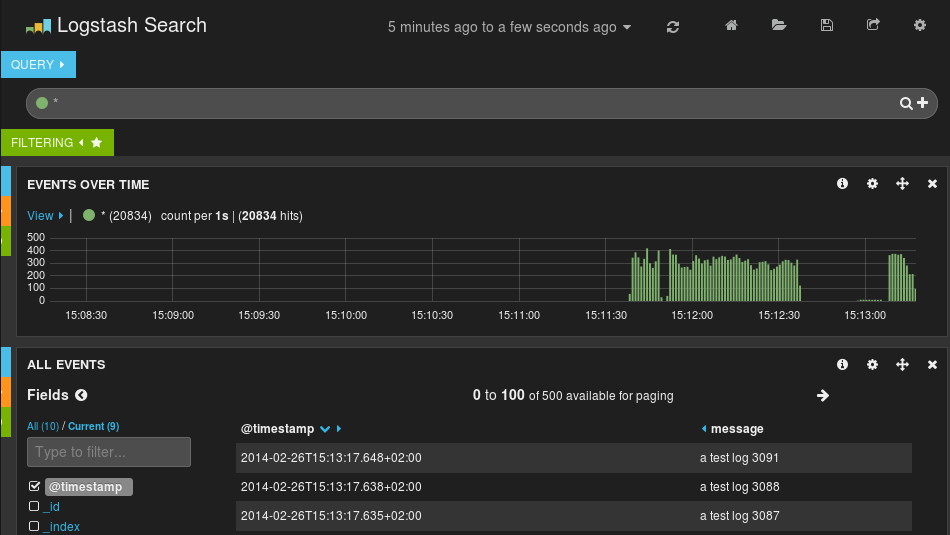

In this post, you’ll see how to install and configure the needed components so that you can send your local syslog (or files tailed by rsyslog) to Redis for buffering. From there, Logstash can ship the logs to Elasticsearch or to a logging SaaS like Logsene (which exposes the Elasticsearch API for both indexing and searching), so you can search and analyze them with Kibana.
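The buffering flow described above can be sketched in a few lines of Python. This is only an illustration of the queue pattern, not the actual rsyslog or Logstash configuration: `InMemoryList`, `ship` and `drain` are hypothetical names, and the in-memory class is a stand-in for a Redis list so the example runs without a server. With a real Redis, the producer side would correspond to the `RPUSH` command and the consumer side to `LPOP` or `BLPOP`.

```python
import json
from collections import deque


class InMemoryList:
    """Stand-in for a single Redis list. This is NOT a Redis client."""

    def __init__(self):
        self._items = deque()

    def rpush(self, value):
        # Append to the tail, like the Redis RPUSH command.
        self._items.append(value)
        return len(self._items)

    def lpop(self):
        # Remove from the head, like Redis LPOP; None when the list is empty.
        return self._items.popleft() if self._items else None


def ship(queue, host, message):
    """Producer side (rsyslog's role): serialize one event and enqueue it."""
    queue.rpush(json.dumps({"host": host, "message": message}))


def drain(queue):
    """Consumer side (Logstash's role): pop events in arrival order."""
    events = []
    while True:
        raw = queue.lpop()
        if raw is None:
            break
        events.append(json.loads(raw))
    return events


queue = InMemoryList()
ship(queue, "web1", "nginx started")
ship(queue, "web2", "disk almost full")
events = drain(queue)
```

Because the producer only appends and the consumer only pops from the other end, events keep their arrival order, and the producer never has to wait for the consumer as long as the queue has room.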

Tutorial: Sending impstats Metrics to Elasticsearch Using Rulesets and Queues

Originally posted on the Sematext blog: Monitoring rsyslog’s Performance with impstats and Elasticsearch

If you’re using rsyslog for processing lots of logs (and, as we’ve shown before, rsyslog is good at processing lots of logs), you’re probably interested in monitoring it. To do that, you can use impstats, whose name stands for “input module for process stats”. impstats produces information like:

– input stats, like how many events went through each input

– queue stats, like the maximum size of a queue

– action (output or message modification) stats, like how many events were forwarded by each action

– general stats, like CPU time or memory usage
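To make the four kinds of stats more concrete, here is a minimal Python sketch that groups JSON-formatted stats records by their origin. The sample lines are made up for illustration, and the field names (`origin`, `submitted`, `maxqsize`, `processed`, `utime` and so on) are assumptions about what JSON-formatted impstats output looks like. The exact set of counters depends on your rsyslog version and loaded modules. `group_by_origin` is a hypothetical helper, not part of rsyslog.

```python
import json
from collections import defaultdict

# Illustrative records only; real impstats output has more counters.
SAMPLE_LINES = [
    '{"name": "imuxsock", "origin": "imuxsock", "submitted": 120}',
    '{"name": "main Q", "origin": "core.queue", "enqueued": 120, "maxqsize": 7}',
    '{"name": "action 0", "origin": "core.action", "processed": 118, "failed": 2}',
    '{"name": "resource-usage", "origin": "impstats", "utime": 52000, "maxrss": 3400}',
]


def group_by_origin(lines):
    """Parse JSON stats lines into {origin: {name: counters}}."""
    grouped = defaultdict(dict)
    for line in lines:
        record = json.loads(line)
        origin = record.pop("origin")
        name = record.pop("name")
        grouped[origin][name] = record  # what remains are the counters
    return dict(grouped)


stats = group_by_origin(SAMPLE_LINES)
print(stats["core.queue"]["main Q"]["maxqsize"])
```

Grouping by origin separates input, queue, action and general stats, which is roughly the split you would use when building per-input or per-action charts.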

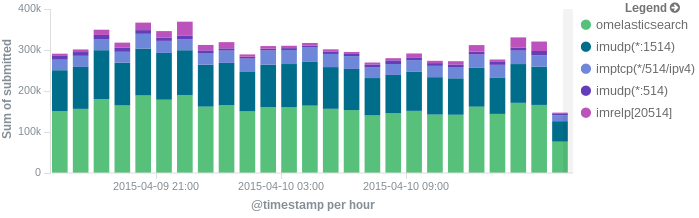

In this post, we’ll show you how to send those stats to Elasticsearch (or Logsene, our log analytics service, which is essentially hosted ELK and exposes the Elasticsearch API), where you can explore them with a nice UI, like Kibana. For example, you can get the number of logs going through each input/output per hour.

More precisely, we’ll look at:

– useful options around impstats

– how to use those stats and what they’re about

– how to ship stats to Elasticsearch/Logsene by using rsyslog’s Elasticsearch output

– how to do this shipping in a fast and reliable way. This will apply to most rsyslog use-cases, not only impstats


rsyslog 8.3.1 (v8-devel) released

We have just released 8.3.1 of the v8-devel branch.

This release provides some improvements for external message modification modules, a module to rewrite message facility and severity, and bug fixes. It is a recommended update for all v8.3 users.

ChangeLog:

http://www.rsyslog.com/changelog-for-8-3-1-v8-devel/

Download:

http://www.rsyslog.com/download-v8-devel/

As always, feedback is appreciated.

Best regards,

Florian Riedl

Changelog for 8.3.1 (v8-devel)

Version 8.3.1 [v8-devel] 2014-04-24

- external message modification interface now supports modifying message PRI

- “jsonmesg” property will include uuid only if one was previously generated

This is primarily a performance optimization. Whenever the message uuid is read, it is generated if not already present. As we used the regular setter, this means that the uuid was always generated, which is quite time-consuming. This has now been changed so that the uuid is only included if it already exists. That also matches the semantics more closely, as “jsonmesg” should not make modifications to the message.

Note that the same applies to “fulljson” passing mode for external plugins.

- added plugin to rewrite message facility and/or severity

Name: fac-sever-rewrite.py

- permits building against json-c 0.12

Unfortunately, json-c had an ABI breakage, so this is necessary. Note that versions prior to 0.12 had security issues (CVE-2013-6370, CVE-2013-6371) and so it is desirable to link against the new version.

Thanks to Thomas D. for the patch.

Note that at least some distros have fixed the security issue in older versions of json-c, so this seems to apply mostly when building from sources.

- bugfix: using UUID property could cause segfault

- bugfix/mmexternal: memory leak

- bugfix: memory leak when using “jsonmesg” property

- bugfix: mmutf8fix did not detect two invalid sequences

Thanks to Axel Rau for the patch.

- bugfix: build problems with lexer.l on some platforms

For some reason, the strdup() prototype and others are missing. I admit that I don’t know why, as this happens only in 8.3.0+ and there is no indication of changes to the affected files. In any case, we need to fix this, and the current solution works at least as an interim one.
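For readers unfamiliar with the PRI value that the first changelog entry refers to: in the syslog protocol, PRI encodes both facility and severity as facility * 8 + severity. The following Python sketch shows what rewriting facility and/or severity means at that level. It is not the code of fac-sever-rewrite.py and does not use the external plugin interface. The function names are hypothetical and only illustrate the arithmetic.

```python
def decode_pri(pri):
    """Split a syslog PRI value into (facility, severity)."""
    if not 0 <= pri <= 191:
        raise ValueError("PRI must be between 0 and 191")
    return divmod(pri, 8)


def encode_pri(facility, severity):
    """Combine facility (0-23) and severity (0-7) into a PRI value."""
    if not 0 <= facility <= 23:
        raise ValueError("facility must be between 0 and 23")
    if not 0 <= severity <= 7:
        raise ValueError("severity must be between 0 and 7")
    return facility * 8 + severity


def rewrite_pri(pri, facility=None, severity=None):
    """Return a new PRI, replacing only the parts that were given."""
    old_facility, old_severity = decode_pri(pri)
    return encode_pri(
        old_facility if facility is None else facility,
        old_severity if severity is None else severity,
    )


# PRI 165 is facility 20 (local4) with severity 5 (notice).
original = 165
raised = rewrite_pri(original, severity=3)   # same facility, severity "error"
moved = rewrite_pri(original, facility=1)    # facility "user", same severity
```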