Recipe: Apache Logs + rsyslog (parsing) + Elasticsearch

Original post: Recipe: Apache Logs + rsyslog (parsing) + Elasticsearch by @Sematext

This recipe is about tailing Apache HTTPD logs with rsyslog, parsing them into structured JSON documents, and forwarding them to Elasticsearch (or a log analytics SaaS, like Logsene, which exposes the Elasticsearch API). Having them indexed in a structured way will allow you to do better analytics with tools like Kibana.

We’ll also cover pushing logs coming from the syslog socket and kernel, and how to buffer all of them properly. So this is quite a complete recipe for your centralized logging needs.

Getting the ingredients

Even though most distros already have rsyslog installed, it’s highly recommended to get the latest stable from the rsyslog repositories. The packages you’ll need are:

- rsyslog. The base package, including the file-tailing module (imfile)

- rsyslog-mmnormalize. This gives you mmnormalize, a module that will do the parsing of common Apache logs to JSON

- rsyslog-elasticsearch, for the Elasticsearch output

With the ingredients in place, let’s start cooking a configuration. The configuration needs to do the following:

- load the required modules

- configure inputs: tailing Apache logs and system logs

- configure the main queue to buffer your messages. This is also the place to define the number of worker threads and batch sizes (which will also be Elasticsearch bulk sizes)

- parse common Apache logs into JSON

- define a template where you’d specify how JSON messages would look like. You’d use this template to send logs to Logsene/Elasticsearch via the Elasticsearch output

Loading modules

Here, we’ll need imfile to tail files, mmnormalize to parse them, and omelasticsearch to send them. If you want to tail the system logs, you’d also need to include imuxsock and imklog (for kernel logs).

# system logs
module(load="imuxsock")
module(load="imklog")
# file
module(load="imfile")
# parser
module(load="mmnormalize")
# sender
module(load="omelasticsearch")

Configure inputs

For system logs, you typically don’t need any special configuration (unless you want to listen to a non-default Unix Socket). For Apache logs, you’d point to the file(s) you want to monitor. You can use wildcards for file names as well. You also need to specify a syslog tag for each input. You can use this tag later for filtering.

input(type="imfile"

File="/var/log/apache*.log"

Tag="apache:"

)

NOTE: By default, rsyslog will not poll for file changes every N seconds. Instead, it will rely on the kernel (via inotify) to poke it when files get changed. This makes the process quite realtime and scales well, especially if you have many files changing rarely. Inotify is also less prone to bugs when it comes to file rotation and other events that would otherwise happen between two “polls”. You can still use the legacy mode="polling" by specifying it in imfile’s module parameters.

Queue and workers

By default, all incoming messages go into a main queue. You can also separate flows (e.g. files and system logs) by using different rulesets but let’s keep it simple for now.

For tailing files, this kind of queue would work well:

main_queue(
  queue.workerThreads="4"
  queue.dequeueBatchSize="1000"
  queue.size="10000"
)

This would be a small in-memory queue of 10K messages, which works well if Elasticsearch goes down, because the data is still in the file and rsyslog can stop tailing when the queue becomes full, and then resume tailing. 4 worker threads will pick batches of up to 1000 messages from the queue, parse them (see below) and send the resulting JSONs to Elasticsearch.
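To make the pause-and-resume behaviour concrete, here is a minimal Python sketch of a bounded queue fed by a file reader. It is only a model of the idea described above, not rsyslog's implementation: the names `BoundedQueue`, `offer`, `dequeue_batch` and `tail_into` are hypothetical, and real workers run concurrently rather than in a single thread.

```python
from collections import deque


class BoundedQueue:
    """Toy in-memory queue with a fixed capacity (stands in for queue.size)."""

    def __init__(self, size):
        self.size = size
        self.items = deque()

    def offer(self, msg):
        """Enqueue msg; return False when full so the reader knows to pause."""
        if len(self.items) >= self.size:
            return False
        self.items.append(msg)
        return True

    def dequeue_batch(self, max_batch):
        """Remove and return up to max_batch messages (stands in for queue.dequeueBatchSize)."""
        batch = []
        while self.items and len(batch) < max_batch:
            batch.append(self.items.popleft())
        return batch


def tail_into(queue, lines, start=0):
    """Feed lines[start:] into the queue; return the offset to resume from later."""
    pos = start
    while pos < len(lines) and queue.offer(lines[pos]):
        pos += 1
    return pos
```

Because the unread lines stay in the file, a full queue costs nothing but time: the reader keeps its offset and calls `tail_into` again once a worker has drained a batch.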

If you need a larger queue (e.g. if you have lots of system logs and want to make sure they’re not lost), I would recommend using a disk-assisted memory queue, that will spill to disk whenever it uses too much memory:

main_queue(
  queue.workerThreads="4"
  queue.dequeueBatchSize="1000"
  queue.highWatermark="500000"    # max no. of events to hold in memory
  queue.lowWatermark="200000"     # use memory queue again, when it's back to this level
  queue.spoolDirectory="/var/run/rsyslog/queues"  # where to write on disk
  queue.fileName="stats_ruleset"
  queue.maxDiskSpace="5g"         # it will stop at this much disk space
  queue.size="5000000"            # or this many messages
  queue.saveOnShutdown="on"       # save memory queue contents to disk when rsyslog is exiting
)

Parsing with mmnormalize

The message normalization module uses liblognorm to do the parsing. So in the configuration you’d simply point rsyslog to the liblognorm rulebase:

action(type="mmnormalize" rulebase="/opt/rsyslog/apache.rb" )

where apache.rb will contain rules for parsing apache logs, that can look like this:

version=2
rule=:%clientip:word% %ident:word% %auth:word% [%timestamp:char-to:]%] "%verb:word% %request:word% HTTP/%httpversion:float%" %response:number% %bytes:number% "%referrer:char-to:"%" "%agent:char-to:"%"%blob:rest%

Here, version=2 indicates that rsyslog should use liblognorm’s v2 engine (which was introduced in rsyslog 8.13), and the line after it is the actual rule for parsing logs. You can find more details about configuring those rules in the liblognorm documentation.

Besides parsing Apache logs, creating new rules typically requires a lot of trial and error. To check your rules without messing with rsyslog, you can use the lognormalizer binary like:

head -1 /path/to/log.file | /usr/lib/lognorm/lognormalizer -r /path/to/rulebase.rb -e json

NOTE: If you’re used to Logstash’s grok, these kinds of parsing rules will look very familiar. However, things are quite different under the hood. Grok is a nice abstraction over regular expressions, while liblognorm builds parse trees out of specialized parsers. This makes liblognorm much faster, especially as you add more rules. In fact, it scales so well that, for all practical purposes, performance depends on the length of the log lines and not on the number of rules. This post explains the theory behind this assumption, and this is actually proven by various tests. The downside is that you’ll lose some of the flexibility offered by regular expressions. You can still use regular expressions with liblognorm (you’d need to set allow_regex to on when loading mmnormalize) but then you’d lose a lot of the benefits that come with the parse tree approach.
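To get a feel for which fields the rule above yields, the following Python sketch approximates it with a regular expression. This is deliberately the regex approach that the note contrasts with liblognorm, used here only as an illustration: it does not call liblognorm, the group names simply mirror the field names in the rule, and the sample log line is made up.

```python
import re

# Regex stand-in for the liblognorm rule; one named group per rule field.
APACHE_COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d+) (?P<bytes>\d+) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"(?P<blob>.*)'
)


def parse_line(line):
    """Return a dict of fields, or None if the line does not match."""
    match = APACHE_COMBINED.match(line)
    return match.groupdict() if match else None


# Made-up sample line in Apache's combined log format.
sample = (
    '203.0.113.7 - - [10/Oct/2015:13:55:36 +0000] '
    '"GET /index.html HTTP/1.1" 200 2326 '
    '"http://example.com/start" "Mozilla/5.0"'
)
fields = parse_line(sample)
```

Unlike this sketch, where every value stays a string, liblognorm applies typed parsers, so treat it as a way to sanity-check field boundaries rather than as a replacement for testing with lognormalizer.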

Template for parsed logs

Since we want to push logs to Elasticsearch as JSON, we’d need to use templates to format them. For Apache logs, by the time parsing ended, you already have all the relevant fields in the $!all-json variable, that you’ll use as a template:

template(name="all-json" type="list"){

property(name="$!all-json")

}

Template for time-based indices

For the logging use-case, you’d probably want to use time-based indices (e.g. if you keep your logs for 7 days, you can have one index per day). Such a design will give your cluster a lot more capacity due to the way Elasticsearch merges data in the background (you can learn the details in our presentations at GeeCON and Berlin Buzzwords).

To make rsyslog use daily or other time-based indices, you need to define a template that builds an index name off the timestamp of each log. This is one that names them logstash-YYYY.MM.DD, like Logstash does by default:

template(name="logstash-index"

type="list") {

constant(value="logstash-")

property(name="timereported" dateFormat="rfc3339" position.from="1" position.to="4")

constant(value=".")

property(name="timereported" dateFormat="rfc3339" position.from="6" position.to="7")

constant(value=".")

property(name="timereported" dateFormat="rfc3339" position.from="9" position.to="10")

}

And then you’d use this template in the Elasticsearch output:

action(type="omelasticsearch"
  template="all-json"
  dynSearchIndex="on"
  searchIndex="logstash-index"
  searchType="apache"
  server="MY-ELASTICSEARCH-SERVER"
  bulkmode="on"
  action.resumeretrycount="-1"
)
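The position.from and position.to values in the logstash-index template are 1-based, inclusive character positions within the RFC 3339 timestamp. As a quick way to check that they pick out the year, month and day, here is a small Python sketch that does the same slicing; the function name `logstash_index` and the sample timestamp are assumptions made for illustration.

```python
def logstash_index(timereported_rfc3339, prefix="logstash-"):
    """Build an index name like logstash-YYYY.MM.DD from an RFC 3339 timestamp."""
    ts = timereported_rfc3339
    year = ts[0:4]    # position.from="1" position.to="4"
    month = ts[5:7]   # position.from="6" position.to="7"
    day = ts[8:10]    # position.from="9" position.to="10"
    return f"{prefix}{year}.{month}.{day}"


# Made-up timestamp in the shape produced by dateFormat="rfc3339".
index_name = logstash_index("2015-10-10T13:55:36.123456+02:00")
```

Note that the slicing works on the timestamp as written, including its UTC offset, so a log is assigned to the day shown in the timestamp string rather than to the UTC day.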

Putting both Apache and system logs together

If you use the same rsyslog to parse system logs, mmnormalize won’t parse them (because they don’t match Apache’s common log format). In this case, you’ll need to pick the rsyslog properties you want and build an additional JSON template:

template(name="plain-syslog"

type="list") {

constant(value="{")

constant(value="\"timestamp\":\"") property(name="timereported" dateFormat="rfc3339")

constant(value="\",\"host\":\"") property(name="hostname")

constant(value="\",\"severity\":\"") property(name="syslogseverity-text")

constant(value="\",\"facility\":\"") property(name="syslogfacility-text")

constant(value="\",\"tag\":\"") property(name="syslogtag" format="json")

constant(value="\",\"message\":\"") property(name="msg" format="json")

constant(value="\"}")

}

Then you can make rsyslog decide: if a log was parsed successfully, use the all-json template. If not, use the plain-syslog one:

if $parsesuccess == "OK" then {

action(type="omelasticsearch"

template="all-json"

...

)

} else {

action(type="omelasticsearch"

template="plain-syslog"

...

)

}

And that’s it! Now you can restart rsyslog and get both your system and Apache logs parsed, buffered and indexed into Elasticsearch. If you’re a Logsene user, the recipe is a bit simpler: you’d follow the same steps, except that you’ll skip the logstash-index template (Logsene does that for you) and your Elasticsearch actions will look like this:

action(type="omelasticsearch" template="all-json or plain-syslog" searchIndex="LOGSENE-APP-TOKEN-GOES-HERE" searchType="apache" server="logsene-receiver.sematext.com" serverport="80" bulkmode="on" action.resumeretrycount="-1" )

Coupling with Logstash via Redis

Original post: Recipe: rsyslog + Redis + Logstash by @Sematext

OK, so you want to hook up rsyslog with Logstash. If you don’t remember why you want that, let me give you a few hints:

- Logstash can do lots of things and is easy to set up, but it tends to be too heavy to put on every server

- you have Redis already installed so you can use it as a centralized queue. If you don’t have it yet, it’s worth a try because it’s very light for this kind of workload.

- you have rsyslog on pretty much all your Linux boxes. It’s light and surprisingly capable, so why not make it push to Redis in order to hook it up with Logstash?

In this post, you’ll see how to install and configure the needed components so you can send your local syslog (or tail files with rsyslog) to be buffered in Redis. From there, Logstash can ship the logs to Elasticsearch or to a logging SaaS like Logsene (which exposes the Elasticsearch API for both indexing and searching), so you can search and analyze them with Kibana.

RSyslog Windows Agent 3.1 Released

Adiscon is proud to announce the 3.1 release of RSyslog Windows Agent.

This is a maintenance release for RSyslog Windows Agent. It includes some bugfixes as well as a new rule date condition which can be used to process events starting from a certain date. A few new options have been added to the Syslog Service as well.

Detailed information can be found in the version history below.

Build-IDs: Service 3.1.0.134, Client 3.1.0.213


Version 3.1 is a free download. Customers with existing 2.x keys can contact our Sales department for upgrade prices. If you have a valid Upgrade Insurance ID, you can request a free new key by sending your Upgrade Insurance ID to sales@adiscon.com. Please note that the download enables the free 30-day trial version if used without a key, so you can go ahead and evaluate it right now.

Tutorial: Sending impstats Metrics to Elasticsearch Using Rulesets and Queues

Originally posted on the Sematext blog: Monitoring rsyslog’s Performance with impstats and Elasticsearch

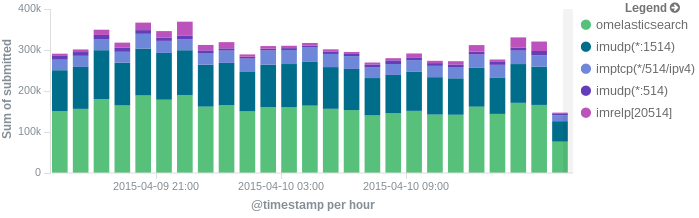

If you’re using rsyslog for processing lots of logs (and, as we’ve shown before, rsyslog is good at processing lots of logs), you’re probably interested in monitoring it. To do that, you can use impstats, which comes from input module for process stats. impstats produces information like:

– input stats, like how many events went through each input

– queue stats, like the maximum size of a queue

– action (output or message modification) stats, like how many events were forwarded by each action

– general stats, like CPU time or memory usage

In this post, we’ll show you how to send those stats to Elasticsearch (or Logsene, our log analytics service, essentially hosted ELK, which exposes the Elasticsearch API), where you can explore them with a nice UI, like Kibana. For example, you can get the number of logs going through each input/output per hour.

More precisely, we’ll look at:

– useful options around impstats

– how to use those stats and what they’re about

– how to ship stats to Elasticsearch/Logsene by using rsyslog’s Elasticsearch output

– how to do this shipping in a fast and reliable way. This will apply to most rsyslog use-cases, not only impstats


RSyslog Windows Agent 3.0 Released

Adiscon is proud to announce the 3.0 release of RSyslog Windows Agent.

This new major release adds full support for Windows 2012 R2 and also has been verified to work on Windows 10 preview versions.

The new major version is a milestone in many ways. Most importantly, the performance of the core engine has been considerably increased. All existing configurations will benefit from this. Also, a new Configuration Client has been added, which has been rewritten using the .Net Framework (like the InterActive Syslog Viewer). With the new Configuration Client, we also introduce support for a new file-based configuration format (as an alternative to the registry-based method). RSyslog Windows Agent can now run from a configuration file and save its state values into files.

We also extended the classic EventLog Monitor to support multiple dynamic *.evt files for NetApp customers.

Detailed information can be found in the version history below.

Build-IDs: Service 3.0.130, Client 3.0.201


Version 3.0 is a free download. Customers with existing 2.x keys can contact our Sales department for upgrade prices. If you have a valid Upgrade Insurance ID, you can request a free new key by sending your Upgrade Insurance ID to sales@adiscon.com. Please note that the download enables the free 30-day trial version if used without a key, so you can go ahead and evaluate it right now.

Daily Stable Build

As per our policy, the git master branch is kept stable. In other words: when it comes time to craft a scheduled stable release (every 6 weeks), we grab the master branch as-is and use it to build the release. We currently provide daily stable versions for Ubuntu only (helping hands for other platforms would be appreciated). To obtain them, visit the Ubuntu repository installation instructions.

Note: you must replace “v8-stable” in these instructions with “v8-devel”. The name “v8-devel” is used for historic reasons. It will probably be changed soon to better reflect that this actually is stable code.

Using the stable daily build has some advantages over the scheduled one:

- you receive the latest bug fixes, including those for known issues in the scheduled stable release

- you get hold of the latest feature updates; note that some experimental features not fully matured may be included (this also happens, a bit later, with the scheduled stable release)

- a real plus over the scheduled release is that if we actually introduce a bug, it will possibly be fixed much more quickly than the same bug once it has made its way into the scheduled release.

You do not need to be concerned that you will break configurations by using the daily stable. As per our policies, we never make changes that break existing configs. The only exception to this rule is if there is an extremely good reason for doing so. If so, the same breaking change will be propagated at most 6 weeks later into the scheduled stable release.

In short: there are many good reasons to use the daily stable builds. Please do so. The community fully supports them.

Version Numbering

Daily stable build version numbering is a bit different from usual. Daily builds will identify themselves like this: rsyslogd 8.1907.0.35e7f12a2c04. We simply add the 12-digit commit hash to the version string used for the scheduled version. Also, daily builds are always one version ahead of the scheduled version, because that is what they will later be known as.
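If you need to tell daily builds from scheduled releases in scripts, the version string can be split mechanically. The Python sketch below assumes the format is exactly the one shown above (three numeric components, optionally followed by a 12-character hexadecimal commit hash); the function name `parse_rsyslog_version` is hypothetical.

```python
import re

# Three numeric components, optionally followed by a 12-character hex commit hash.
VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)(?:\.([0-9a-f]{12}))?$")


def parse_rsyslog_version(version):
    """Split a version string into its release part and optional commit hash."""
    match = VERSION_RE.match(version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, patch, commit = match.groups()
    return {
        "version": f"{major}.{minor}.{patch}",
        "commit": commit,                 # None for scheduled releases
        "daily": commit is not None,
    }


info = parse_rsyslog_version("8.1907.0.35e7f12a2c04")
```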

rsyslog -devel packages are being removed soon

If you use rsyslog’s devel packages on your system, you will receive errors soon. Be sure to read the complete posting to avoid trouble!

As part of rsyslog’s new release schedule and version naming, devel releases will no longer be named according to the “normal” numbering scheme. This also means that the previous “devel” branches will disappear, as git master branch now is the always-current devel version.

Keep in mind that we previously had a release cycle of 3 to 9 months for a new feature to appear in a stable version. That was because new feature releases were only done when a complete devel turnaround was done, and relatively many new features were added. For this reason, some people opted to run devel versions in production, and thus needed specific tarballs (and packages) for them.

With the new six week release cycle, we get new features rather quickly into the stable builds. So it usually should be no problem to wait for the next stable to use that recently-implemented new feature. As such, there is no need any longer for special devel releases, and thus no need for devel tarballs and packages.

Well… almost. One thing we would like to have is a “daily devel version”. The idea is that if the testbench runs are OK, a new tarball and a set of packages is generated automatically and posted to a special archive. In general, that archive should receive an update once a day. So people really interested in the [b]leading edge can simply install from that daily package archive and report bugs quickly, thus helping the development process. Unfortunately, time is precious and we don’t know when and if we can set up the required automation. Most probably not before January 2015, and how it works out then remains to be seen.

In the interim, we will begin to delete the -devel packages. The old -devel tarballs will remain available, at least for the time being. The problem with -devel packages is that folks may have set their system to use the -devel repo. If we just kept it as is, those systems would never again receive any updates, neither security-related nor others, simply because -devel versions no longer exist in the way they were. That would pose a potentially big security risk. As such, we will delete the -devel content, and begin to do so early next week. If you use the -devel packages, be sure to switch to v8-stable instead.

Ubuntu Repository legacy

The Adiscon Ubuntu Repository has been set up to provide support for the latest rsyslog versions on Ubuntu, including support for necessary third-party packages. Please note that the Ubuntu Repository is open for testing at the moment, and contains the latest RSyslog version. Currently supported Ubuntu Versions:

- Ubuntu 14.04 (Trusty)

- Ubuntu 13.10 (Saucy Salamander)

- Ubuntu 12.04 (Precise)

- Ubuntu 12.10 (Quantal) (Not Updated anymore)

Currently supported RSyslog Branches:

- v8-devel

- v8-stable

- v7-devel (Not Updated anymore)

- v7-stable

The new packages are based on the original and latest Ubuntu 12 rsyslog packages, so in most cases a simple sudo apt-get update && sudo apt-get upgrade will be enough to update rsyslog. Please note that these packages are currently experimental. Use at your own risk.

Add our PPA Repository using the classic method:

- Install our PGP Key into your apt system

apt-key adv --recv-keys --keyserver keyserver.ubuntu.com AEF0CF8E
gpg --export --armor AEF0CF8E | sudo apt-key add -

- Edit your /etc/apt/sources.list and add these lines to the end. Replace v8-devel with v7-stable or v8-stable if you want to use the RSYSLOG stable packages.

For Ubuntu (12.04 LTS) Precise

# Adiscon repository
deb http://ppa.launchpad.net/adiscon/v8-devel/ubuntu precise main
deb-src http://ppa.launchpad.net/adiscon/v8-devel/ubuntu precise main

For Ubuntu (13.10) Saucy

# Adiscon repository
deb http://ppa.launchpad.net/adiscon/v8-devel/ubuntu saucy main
deb-src http://ppa.launchpad.net/adiscon/v8-devel/ubuntu saucy main

- Once done perform these commands to update your apt cache and install the latest RSYSLOG version

sudo apt-get update && sudo apt-get upgrade

- If you receive a message like this while upgrading follow these steps below:

The following packages have been kept back: rsyslog
0 upgraded, 0 newly installed, 0 to remove and 1 not upgraded.

sudo apt-get install rsyslog

We highly appreciate any feedback or bug reports.

Performance Tuning & Tests for the Elasticsearch Output

Original post: Rsyslog 8.1 Elasticsearch Output Performance by @Sematext

Version 8 brings major changes in rsyslog’s core – see Rainer’s presentation about it for more details. Those changes should give outputs better performance, and the Elasticsearch one should benefit a lot. Since we’re using rsyslog and Elasticsearch in Sematext’s own log analytics product, Logsene, we had to take the new version for a spin.

The Weapon and the Target

For testing, we used a good-old i3 laptop, with 8GB of RAM. We generated 20 million logs, sent them to rsyslog via TCP and from there to Elasticsearch in the Logstash format, so they can get explored with Kibana. The objective was to stuff as many events per second into Elasticsearch as possible.

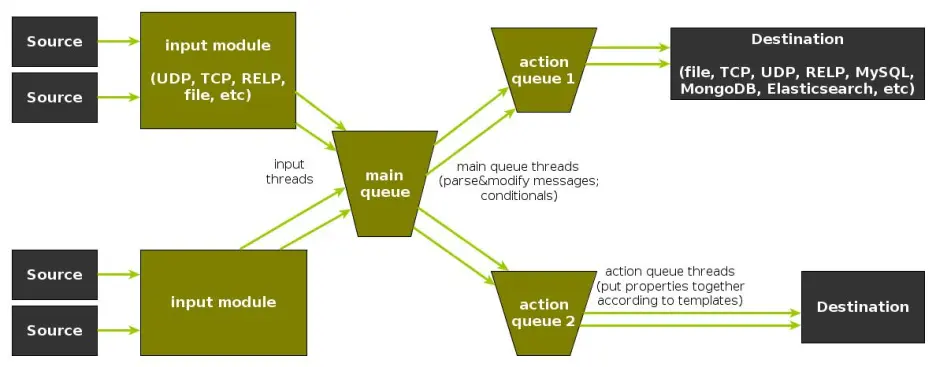

Rsyslog Architecture Overview

In order to tweak rsyslog effectively, one needs to understand its architecture, which is not that obvious (although there’s an ongoing effort to improve the documentation). The gist of its architecture is represented in the figure below:

- you have input modules taking messages (from files, TCP/UDP, journal, etc.) and pushing them to a main queue

- one or more main queue threads take those events and parse them. By default, they parse syslog formats (RFC-3164, RFC-5424 and various derivatives), but you can configure rsyslog to use message modifier modules to do additional parsing (e.g. CEE-formatted JSON messages). Either way, this parsing generates structured events, made out of properties

- after parsing, the main queue threads push events to the action queue. Or queues, if there are multiple actions and you want to fan-out

- for each defined action, one or more action queue threads take properties from events according to templates, and make messages that will be sent to the destination. In Elasticsearch’s case, a template should make Elasticsearch JSON documents, and the destination would be the REST API endpoint

There are two more things to say about rsyslog’s architecture before we move on to the actual test:

- you can have multiple independent flows (like the one in the figure above) in the same rsyslog process by using rulesets. Think of rulesets as swim-lanes. They’re useful for example when you want to process local logs and remote logs in a completely separate manner

- queues can be in-memory, on disk, or a combination called disk-assisted. Here, we’ll use in-memory because they’re the fastest. For more information about how queues work, take a look here

Configuration

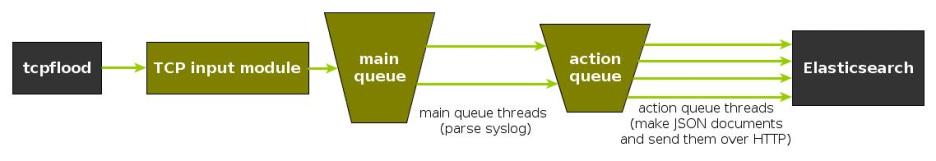

To generate messages, we used tcpflood, a small and light tool that’s part of rsyslog’s testbench. It generates messages and sends them over to the local syslog via TCP.

Rsyslog received those messages with the imtcp input module, queued them and forwarded them to Elasticsearch 0.90.7, which was also installed locally. We also tried with Elasticsearch 1.0 and got the same results (see below).

The flow of messages in this test is represented in the following figure:

The actual rsyslog config is listed below (in the new configuration format). It can be tuned further (for example by using the multithreaded imptcp input module), but we didn’t get significant improvements in this particular scenario.

module(load="imtcp")            # TCP input module
module(load="omelasticsearch")  # Elasticsearch output module

input(type="imtcp" port="13514")  # where to listen for TCP messages

main_queue(
  queue.size="1000000"           # capacity of the main queue
  queue.dequeuebatchsize="1000"  # process messages in batches of 1000 and move them to the action queues
  queue.workerthreads="2"        # 2 threads for the main queue
)

# template to generate JSON documents for Elasticsearch in Logstash format
template(name="plain-syslog" type="list") {
  constant(value="{")
  constant(value="\"@timestamp\":\"")     property(name="timereported" dateFormat="rfc3339")
  constant(value="\",\"host\":\"")        property(name="hostname")
  constant(value="\",\"severity\":\"")    property(name="syslogseverity-text")
  constant(value="\",\"facility\":\"")    property(name="syslogfacility-text")
  constant(value="\",\"syslogtag\":\"")   property(name="syslogtag" format="json")
  constant(value="\",\"message\":\"")     property(name="msg" format="json")
  constant(value="\"}")
}

action(type="omelasticsearch"
  template="plain-syslog"          # use the template defined earlier
  searchIndex="test-index"
  bulkmode="on"                    # use the Bulk API
  queue.dequeuebatchsize="5000"    # ES bulk size
  queue.size="100000"              # capacity of the action queue
  queue.workerthreads="5"          # 5 workers for the action
  action.resumeretrycount="-1"     # retry indefinitely if ES is unreachable
)

You can see from the configuration that:

- both main and action queues have a defined size in number of messages

- both have a number of threads that deliver messages to the next step. The action needs more because it has to wait for Elasticsearch to reply

- moving of messages from the queues happens in batches. For the Elasticsearch output, the batch of messages is sent through the Bulk API, which makes queue.dequeuebatchsize effectively the bulk size
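To illustrate why the dequeue batch size ends up being the bulk size, here is a rough Python sketch that splits messages into batches and renders each batch in the newline-delimited format the Elasticsearch Bulk API expects (one action line followed by one document line per event). It is an assumption-laden illustration, not what omelasticsearch emits byte for byte: the helper names `batches` and `bulk_body` are hypothetical, and the metadata fields in the action line depend on the Elasticsearch version (older versions also expect a `_type`).

```python
import json


def batches(items, batch_size):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def bulk_body(docs, index, doc_type=None):
    """Render docs as a Bulk API body: an action line plus a source line per doc."""
    if not docs:
        return ""
    lines = []
    for doc in docs:
        meta = {"_index": index}
        if doc_type is not None:
            meta["_type"] = doc_type  # only needed by older Elasticsearch versions
        lines.append(json.dumps({"index": meta}))
        lines.append(json.dumps(doc))
    # The Bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


# 12 made-up events with a batch size of 5 give three bulk requests (5, 5 and 2 docs).
events = [{"message": f"msg {i}"} for i in range(12)]
bodies = [bulk_body(batch, "test-index") for batch in batches(events, 5)]
```

Each entry in `bodies` corresponds to one HTTP request, which is why larger batches mean fewer round trips while a worker waits for Elasticsearch to reply.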

Results

We started with default Elasticsearch settings. Then we tuned them to leave rsyslog with a more significant slice of the CPU. We monitored the indexing rate with SPM for Elasticsearch. Here are the average results over 20 million indexed events:

- with default Elasticsearch settings, we got 8,000 events per second

- after setting Elasticsearch up in a more production-like way (5 second refresh interval, increased index buffer size, translog thresholds, etc.), the throughput went up to an average of 20,000 events per second

- in the end, we went berserk and used in-memory indices, updated the mapping to disable any storing or indexing for any field, to have Elasticsearch do as little work as possible and make room for rsyslog. We got an average of 30,000 events per second. In this scenario, rsyslog was using between 1 and 1.5 of the 4 virtual CPU cores, with tcpflood using 0.5 and Elasticsearch using from 2 to 2.5
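For a sense of scale, the averages above translate into total run times for the 20 million events as follows. This is plain arithmetic on the reported figures, assuming the rate stays constant over the whole run; the scenario labels are just shorthand for the three bullets.

```python
TOTAL_EVENTS = 20_000_000

# Average events per second reported for each scenario.
RATES = {
    "default settings": 8_000,
    "production-like settings": 20_000,
    "minimal indexing": 30_000,
}


def seconds_to_index(total_events, events_per_second):
    """Time needed to index total_events at a constant rate."""
    return total_events / events_per_second


durations = {name: seconds_to_index(TOTAL_EVENTS, eps) for name, eps in RATES.items()}
```

That works out to 2,500 seconds with default settings, 1,000 seconds with production-like settings, and roughly 667 seconds in the minimal-indexing scenario.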

Conclusion

20K EPS on a low-end machine with production-like configuration means Elasticsearch is quick at indexing. This is very good for logs, where you typically have lots of messages being generated, compared to how often you search.

If you need some tool to ship your logs to Elasticsearch with minimum overhead, rsyslog version 8 may well be your best bet.


librelp 1.2.4

librelp 1.2.4 [download]

This version of librelp is a correction for the API/ABI change in v1.2.3. Everything else stays the same.

Version 1.2.4 – 2014-03-17

– correct API/ABI change in 1.2.3

My reasoning was flawed, and we could run into problems with

apps that required the new version but could not detect that an

older one was installed.

Thanks to Michael Biebl for pointing this out.

What we have done is:

– revert back to previous state (return void)

* relpSrvEnableTLS();

* relpSrvEnableTLSZip();

These functions are now deprecated.

– introduce new functions that return a state

* relpSrvEnableTLS2();

* relpSrvEnableTLSZip2();

sha256sum: cf4f26f9a75991eedf3eaf414280c8da3532c38e619a465d23008c714f5c1cf1