The rocket-fast system for log processing pipelines

rsyslog helps you collect, transform, and route event data reliably at scale. Built for speed, flexibility, and control in modern Linux and container environments.

Runs great on single hosts and in containerized deployments.

Trusted by organizations worldwide

- 1M+ messages per second

- 100+ input/output modules

- 20+ years in production

📦 Current versions

Download the latest stable release, daily builds, or explore containerized deployments. All versions include documentation and release notes.

🪟 Windows Agent: 8.1 [download]

Get started in 60 seconds

Two quick ways to try rsyslog.

# Debian/Ubuntu
sudo apt-get update
sudo apt-get install -y rsyslog
sudo systemctl enable --now rsyslog
# Config lives in /etc/rsyslog.conf and /etc/rsyslog.d/

# Docker (example)
# --name sets the container name; rsyslog/rsyslog on the last line is the image
docker run --name rsyslog -d \
  -v "$(pwd)/rsyslog.conf:/etc/rsyslog.conf:ro" \
  -p 514:514/tcp -p 514:514/udp \
  rsyslog/rsyslog

See First steps guide and Basic configuration reference for more detail.
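The Docker command above mounts a local rsyslog.conf and publishes port 514 over TCP and UDP. A minimal sketch of a matching config follows, assuming the container should simply accept syslog on both transports and write everything to one file. The output path is illustrative, and the image may expect a different config layout.

```
# rsyslog.conf (illustrative): listen on 514/udp and 514/tcp
module(load="imudp")
input(type="imudp" port="514")

module(load="imtcp")
input(type="imtcp" port="514")

# Write every received message to a single file (hypothetical path)
action(type="omfile" file="/var/log/remote.log")
```

Running `rsyslogd -N1 -f ./rsyslog.conf` on a host with rsyslog installed checks the syntax before the file is mounted into the container.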

What is rsyslog?

rsyslog is an open-source, high-performance engine for collecting, transforming and routing event data. It ingests from diverse sources (files, journals, syslog, Kafka), applies parsing, enrichment and filtering rules via RainerScript and modules like mmnormalize, buffers safely with disk-assisted queues, and forwards to Elasticsearch, Kafka, HTTP endpoints or files. With over 20 years of proven reliability, rsyslog bridges classic syslog-style logging and modern data pipelines — now guided by an AI-First (human-controlled) vision for smarter observability.
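As a rough illustration of the ingest, parse, filter, and forward flow described above, the sketch below tails a JSON log file, parses each line, drops debug events, and ships the rest to Elasticsearch. The file path, tag, ruleset name, `level` field, server address, and index name are all assumptions made for the example, not values from the project.

```
module(load="imfile")           # tail text files
module(load="mmjsonparse")      # parse JSON payloads into $! properties
module(load="omelasticsearch")  # bulk-index into Elasticsearch

# Hypothetical application log with one JSON object per line
input(type="imfile" file="/var/log/myapp/app.json" tag="myapp:" ruleset="myapp")

ruleset(name="myapp") {
    # cookie="" accepts plain JSON without the "@cee:" prefix
    action(type="mmjsonparse" cookie="")

    # Forward only successfully parsed, non-debug events
    if $parsesuccess == "OK" and $!level != "debug" then {
        action(type="omelasticsearch"
               server="localhost" serverport="9200"
               searchIndex="myapp-logs" bulkmode="on")
    }
}
```

Binding the input to its own ruleset keeps this pipeline separate from the default system log rules.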

Why operators rely on rsyslog

💾 Reliable delivery

Disk-assisted queues and backpressure controls keep pipelines flowing.

📎 Flexible parsing

Support for regex, structured formats, JSON, and liblognorm pipelines.

📦 Powerful routing

Conditional rules and reusable templates with RainerScript.

📭 Broad outputs

Files, TCP/UDP/TLS syslog, Kafka, HTTP, and database destinations.

📰 Performance at scale

Multi-threaded design with tuning controls for predictable latency.

🌍 Runs anywhere

Bare metal, virtual machines, and containerized environments.
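To make the reliable-delivery and tuning points above more concrete, here is a hedged sketch of a TLS forwarding action backed by a disk-assisted queue. The host name, CA path, queue file name, and sizes are placeholders, and the gtls driver assumes rsyslog's GnuTLS support package is installed.

```
# CA certificate used to verify the remote server (hypothetical path)
global(DefaultNetstreamDriverCAFile="/etc/ssl/certs/ca.pem")

action(type="omfwd" target="logs.example.com" port="6514" protocol="tcp"
       StreamDriver="gtls" StreamDriverMode="1"
       StreamDriverAuthMode="x509/name"
       StreamDriverPermittedPeers="logs.example.com"
       queue.type="LinkedList"        # in-memory queue, runs asynchronously
       queue.filename="fwd_logs"      # a file name enables spilling to disk
       queue.maxDiskSpace="1g"        # cap on spool size
       queue.saveOnShutdown="on"      # persist queued messages on shutdown
       queue.workerThreads="2"        # parallel workers for this action
       action.resumeRetryCount="-1")  # retry indefinitely while target is down
```

While the target is unreachable, messages accumulate in memory and then on disk up to the configured limit, and delivery resumes once the connection returns.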

Works with your observability stack

| Target | Documentation |
|---|---|
| Elastic / OpenSearch | output-elasticsearch module guide |
| Grafana Loki | HTTP/JSON shipping example |
| Kafka | omkafka documentation |
| Splunk HEC | omhttp configuration example |
| Files & rotation | omfile output reference |
| Databases | Output modules overview |

Integrates via open protocols (syslog, TCP/TLS, HTTP, Kafka). No cloud-vendor lock-in.
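As one example of HTTP-based integration, the sketch below posts events to a Splunk HTTP Event Collector through omhttp. The server name, port, and token are placeholders, the template name is made up for illustration, and omhttp is a contributed module that may need a separate package on some distributions.

```
module(load="omhttp")

# HEC accepts a JSON object with an "event" field and optional metadata
template(name="hec_event" type="list") {
    constant(value="{\"host\":\"") property(name="hostname" format="json")
    constant(value="\",\"event\":\"") property(name="msg" format="json")
    constant(value="\"}")
}

action(type="omhttp"
       server="splunk.example.com" serverport="8088" usehttps="on"
       restpath="services/collector/event"
       httpheaderkey="Authorization"
       httpheadervalue="Splunk 00000000-0000-0000-0000-000000000000"
       template="hec_event")
```

In production this action would usually carry its own queue as well, so that a slow or unavailable endpoint does not block other outputs.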

💼 Professional services for production workloads

Need expert help to ship faster and reduce risk? Our team provides architecture reviews, performance tuning, migrations, troubleshooting, and long-term support—tailored to your stack.

- ✅ Architecture & performance reviews

- ✅ Production readiness, HA & DR patterns

- ✅ Migrations (e.g., from Kiwi, Logstash)

- ✅ Custom modules and integrations

- ✅ Incident response and troubleshooting

- ✅ SLAs and long-term support options

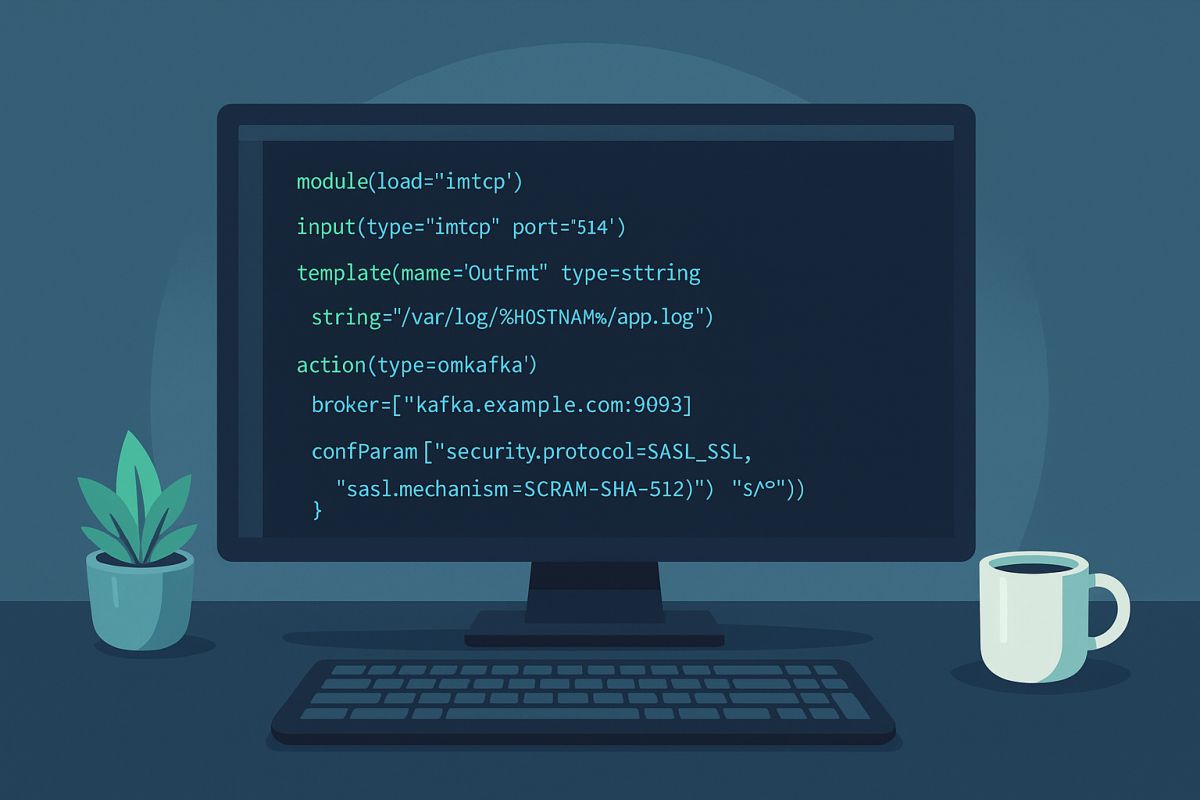

💻 Two tiny examples

Example A (RainerScript)

# Inputs: local syslog socket and kernel log
module(load="imuxsock")
module(load="imklog")

# Render each message as one JSON object per line
template(name="jsonl" type="list") {
    constant(value="{\"ts\":\"") property(name="timereported" dateFormat="rfc3339")
    constant(value="\",\"host\":\"") property(name="hostname")
    constant(value="\",\"msg\":\"") property(name="msg" format="json")
    constant(value="\"}\n")
}

# Write all messages to a JSON Lines file
*.* action(type="omfile" file="/var/log/events.jsonl" template="jsonl")

Example B (RainerScript)

# Input: local syslog socket; output: Kafka
module(load="imuxsock")
module(load="omkafka")

# Forward only sshd messages to the security-auth topic
if ($programname == "sshd") then {
    action(type="omkafka"
           broker=["kafka:9092"]
           topic="security-auth"
           template="RSYSLOG_TraditionalFileFormat")
}

🤖 Self-support with the rsyslog Assistant

The rsyslog Assistant is an AI-powered self-support tool based on curated, verified project knowledge, supervised by maintainers. Use it to explore configuration options, examples, and troubleshooting tips.

📢 Latest from the project

New: production-ready observability stack integrated into rsyslog

Rsyslog now includes an officially maintained, production-oriented observability stack for centralized logging and monitoring. The stack is designed as a…

The rsyslog 2025 Year in Review

Evolving Proven Infrastructure for a New Era. The year 2025 was a defining year for rsyslog. Not because of a…

Season’s Greetings from the rsyslog Project

As the year comes to a close, we would like to send our warm thanks to everyone who makes the…