rsyslog 8.9.0 (v8-stable) released

We have released rsyslog 8.9.0.

http://www.rsyslog.com/changelog-for-8-9-0-v8-stable/

Download:

http://www.rsyslog.com/downloads/download-v8-stable/

As always, feedback is appreciated.

Best regards,

Florian Riedl

Changelog for 8.9.0 (v8-stable)

Version 8.9.0 [v8-stable] 2015-04-07

- omprog: add option “hup.forward” to forward HUP to external plugins

This was suggested by David Lang so that external plugins (and other programs) can also do HUP-specific processing. The default is not to forward HUP, so there is no change of behavior by default.

- imuxsock: added capability to use the regular parser chain

Previously, this used a fixed format, the one known to be spoken on the system log socket. This also adds new parameters:

  - sysSock.useSpecialParser module parameter

  - sysSock.parseHostname module parameter

  - useSpecialParser input parameter

  - parseHostname input parameter

- 0mq: improvements in input and output modules

See the module READMEs; parts of this work are to be considered experimental.

Thanks to Brian Knox for the contribution.

- imtcp: add support for IP-based bind for imtcp -> param “address”

Thanks to github user crackytsi for the patch.

- bugfix: MsgDeserialize out of sync with MsgSerialize for StrucData

This led to failure of disk queue processing when structured data was present. Thanks to github user adrush for the fix.

- bugfix imfile: partial data loss, especially in readMode != 0

closes https://github.com/rsyslog/rsyslog/issues/144

- bugfix: potential large memory consumption with failed actions

see also https://github.com/rsyslog/rsyslog/issues/253

- bugfix: omudpspoof: invalid default send template in RainerScript format

The file format template was used, which obviously does not work for forwarding. Thanks to Christopher Racky for alerting us.

closes https://github.com/rsyslog/rsyslog/issues/268

- bugfix: size-based legacy config statements did not work properly

On some platforms, they were incorrectly handled, resulting in all sorts of “interesting” effects (up to a segfault on startup).

- build system: added option --without-valgrind-testbench

… which provides the capability to either enforce or turn off valgrind use inside the testbench. Thanks to whissi for the patch.

- rsyslogd: fix misleading typos in error messages

Thanks to Ansgar Püster for the fixes.

Using rsyslog and Elasticsearch to Handle Different Types of JSON Logs

Originally posted on the Sematext blog: Using Elasticsearch Mapping Types to Handle Different JSON Logs

By default, Elasticsearch does a good job of figuring out the type of data in each field of your logs. But if you like your logs structured like we do, you probably want more control over how they’re indexed: is time_elapsed an integer or a float? Do you want your tags analyzed so you can search for big in big data? Or do you need them not_analyzed, so you can show top tags via the terms aggregation? Or maybe both?

In this post, we’ll look at how to use index templates to manage multiple types of logs across multiple indices. Also, we’ll explain how to use rsyslog to handle JSON logging and specify types.

Elasticsearch Mapping and Logs

To control settings for how a field is analyzed in Elasticsearch, you’ll need to define a mapping. This works similarly in Logsene, our log analytics SaaS, because it uses Elasticsearch and exposes its API.

With logs you’ll probably use time-based indices, because they scale better (in Logsene, for instance, you get daily indices). To make sure the mapping you define today applies to the index you create tomorrow, you need to define it in an index template.

Managing Multiple Types

Mappings provide a nice abstraction when you have to deal with multiple types of structured data. Let’s say you have two apps generating logs of different structures: both have a timestamp field, but one recording logins has a user field, and another one recording purchases has an amount field.

To deal with this, you can define the timestamp field in the _default_ mapping, which applies to all types. Then, in each type’s own mapping, we’ll define the fields unique to that type. The following snippet is an example that works with Logsene, provided that aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee is your Logsene app token. If you roll your own Elasticsearch, you can use whichever template name you want; just make sure the template pattern matches your index names (for example, logs-* will work if your indices are in the logs-YYYY-MM-dd format).

```
curl -XPUT 'logsene-receiver.sematext.com/_template/aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee_MyTemplate' -d '{
  "template" : "aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee*",
  "order" : 21,
  "mappings" : {
    "_default_" : {
      "properties" : {
        "timestamp" : { "type" : "date" }
      }
    },
    "firstapp" : {
      "properties" : {
        "user" : { "type" : "string" }
      }
    },
    "secondapp" : {
      "properties" : {
        "amount" : { "type" : "long" }
      }
    }
  }
}'
```

Sending JSON Logs to Specific Types

When you send a document to Elasticsearch by using the API, you have to provide an index and a type. You can use an Elasticsearch client for your preferred language to log directly to Elasticsearch or Logsene this way. But I wouldn’t recommend this, because then you’d have to manage things like buffering if the destination is unreachable.

Instead, I’d keep my logging simple and use a specialized logging tool, such as rsyslog, to do the hard work for me. Logging to a file is usually the easiest option. It’s local, and you can have your logging tool tail the file and send contents over the network. I usually prefer sockets (like syslog) because they let me configure rsyslog to:

- write events in a human format to a local file I can tail if I need to (usually in development)

- forward logs without hitting disk if I need to (usually in production)

Whatever you prefer, I think writing to local files or sockets is better than sending logs over the network from your application, unless you’re willing to trade reliability for simplicity and use UDP, which gets rid of most of the complexity.

Opinions aside, if you want to send JSON over syslog, there’s the JSON-over-syslog (CEE) format that we detailed in a previous post. You can use rsyslog’s JSON parser module to take your structured logs and forward them to Logsene:

```
module(load="imuxsock")         # can listen to local syslog socket
module(load="omelasticsearch")  # can forward to Elasticsearch
module(load="mmjsonparse")      # can parse JSON

action(type="mmjsonparse")      # parse CEE-formatted messages

template(name="syslog-cee" type="list") {  # Elasticsearch documents will contain
  property(name="$!all-json")              # all JSON fields that were parsed
}

action(
  type="omelasticsearch"
  template="syslog-cee"          # use the template defined earlier
  server="logsene-receiver.sematext.com"
  serverport="80"
  searchType="syslogapp"
  searchIndex="LOGSENE-APP-TOKEN-GOES-HERE"
  bulkmode="on"                  # send logs in batches
  queue.dequeuebatchsize="1000"  # of up to 1000
  action.resumeretrycount="-1"   # retry indefinitely (buffer) if destination is unreachable
)
```

To send a CEE-formatted syslog message, you can run, for example, `logger '@cee: {"amount": 50}'`. Rsyslog would forward this JSON to Elasticsearch or Logsene via HTTP. Note that Logsene also supports CEE-formatted JSON over syslog out of the box if you want to use a syslog protocol instead of the Elasticsearch API.

Filtering by Type

Once your logs are in, you can filter them by type (via the _type field) in Kibana.

However, if you want more refined filtering by source, we suggest using a separate field for storing the application name. This can be useful when you have different applications using the same logging format. For example, both crond and postfix use plain syslog.

rsyslog 8.6.0 (v8-stable) released

We have released rsyslog 8.6.0.

This is the first stable release under a new release cycle and versioning scheme. This new scheme is important news in itself. For more details, please have a look here:

http://www.rsyslog.com/rsyslogs-new-release-cycle-and-versioning-scheme/

Version 8.6.0 contains important new features like the ability to monitor files via wildcards in imfile. It also contains new, experimental ZeroMQ (0mq) modules (special thanks to team member Brian Knox), improvements to RainerScript and mmnormalize (thanks to Singh Janmejay) and a couple of other improvements.

This release also contains important bug fixes.

This is a recommended upgrade for all users.

http://www.rsyslog.com/changelog-for-8-6-0-v8-stable/

Download:

http://www.rsyslog.com/downloads/download-v8-stable/

As always, feedback is appreciated.

Best regards,

Florian Riedl

Changelog for 8.6.0 (v8-stable)

Version 8.6.0 [v8-stable] 2014-12-02

NOTE: This version also incorporates all changes and enhancements made for

v8.5.0, but in a stable release. For details see immediately below.

- configuration-setting rsyslogd command line options deprecated

For most of them, there are now proper configuration objects. A few will be dropped completely if nobody insists on them. Additional info at

http://blog.gerhards.net/2014/11/phasing-out-legacy-command-line-options.html

- new and enhanced plugins for 0mq. These are currently experimental.

Thanks to Brian Knox who contributed the modules and is their author.

- empty rulesets are now permitted. They no longer raise a syntax error.

- add parameter -N3 to enable config check of partial config file

Use for config include files. Disables checking if any action exists at all.

- rsyslogd -e option has finally been removed

It has been deprecated for many years.

- testbench improvements

Testbench is now more robust and has additional tests.

- testbench is now disabled by default

To enable it, use --enable-testbench. This was done because the testbench now does better checking if required modules are present, and this in turn would lead to configure error messages where none were previously if we left --enable-testbench on by default. Thus we have turned it off. This should not be an issue for those few testbench users.

- add new RainerScript functions wrap() and replace()

Thanks to Singh Janmejay for the patch.

- mmnormalize can now also work on a variable

Thanks to Singh Janmejay for the patch.

- new property date options for day ordinal and week number

Thanks to github user arrjay for the patch.

- remove --enable-zlib configure option, we always require it

It’s hard to envision a system without zlib, so we removed the option.

closes https://github.com/rsyslog/rsyslog/issues/76

- slight source-tree restructuring: contributed modules are now in their own ./contrib directory. The idea is to make it clearer to the end user which plugins are supported by the rsyslog project (those in ./plugins).

- bugfix: imudp makes rsyslog hang on shutdown when more than 1 thread is used

closes https://github.com/rsyslog/rsyslog/issues/126

- bugfix: not all files closed on auto-backgrounding startup

This could happen when not running under systemd. Some low-numbered fds were not closed in that case.

- bugfix: typo in queue configuration parameter made parameter unusable

Thanks to Bojan Smojver for the patch.

- bugfix: uninitialized buffer off-by-one error in hostname generation

The DNS cache used uninitialized memory, which could lead to invalid hostname generation.

Thanks to Jarrod Sayers for alerting us and providing analysis and patch recommendations.

- bugfix imuxsock: possible segfault when SysSock.Use=“off”

Thanks to alexjfisher for reporting this issue.

closes https://github.com/rsyslog/rsyslog/issues/140

- bugfix: RainerScript: invalid ruleset names were accepted during ruleset definition, but could of course not be used when e.g. calling a ruleset.

IMPORTANT: this may cause existing configurations to error out on start, as the invalid names could also be used, e.g. when assigning rulesets.

- bugfix: some module entry points were not called for all modules. Callbacks like endCnfLoad() were primarily being called for input modules. This has been corrected. Note that this bugfix has some regression potential.

- bugfix omlibdbi: connection was taken down in wrong thread

This could have consequences depending on the driver being used. In general, it looks more like a cosmetic issue. For example, with MySQL it led to a small memory leak but also an annoying message about a thread not properly torn down.

- imttcp was removed because it was an incomplete experimental module

- pmrfc3164sd was removed because it was a custom module nobody used

We used to keep this as a sample inside the tree, but whoever wants to look at it can check older versions in git.

- omoracle was removed because it was orphaned and did not build/work for quite some years and nobody was interested in fixing it

remote syslog PRI vulnerability – CVE: CVE-2014-3683

remote syslog PRI vulnerability

===============================

CVE: CVE-2014-3683

Status of this report

---------------------

FINAL

Updated 2014-10-06: effect on sysklogd milder than in initial assessment

Reporter

--------

mancha

Rainer Gerhards

Affected

--------

- rsyslog, most probably all versions (checked v3-stable and above)

- sysklogd (checked most recent versions)

Short Description

-----------------

While preparing a fix for CVE-2014-3634 for sysklogd, mancha discovered and

privately reported that the initial rsyslog fix set was incomplete. It did

not cover cases where PRI values > MAX_INT caused integer overflows

resulting in negative values.

Root Cause

----------

While parsing the syslog message’s PRI value, an integer overflow can

happen (for PRI > MAX_INT), which may cause negative PRI values. The

solutions provided in CVE-2014-3634 do not handle that case.

Effect in Practice

------------------

General

~~~~~~~

Almost all distributions do ship rsyslog without remote reception by

default and almost all distros also put firewall rules into place that

prevent reception of syslog messages from remote hosts (even if rsyslog

would be listening). With these defaults, it is impossible to trigger

the vulnerability in v7 and v8. Older versions may still be vulnerable

if a malicious user writes to the local log socket.

Even when configured to receive remote messages (on a central server),

it is good practice to configure such syslog servers to accept only

messages from trusted peers, e.g. within the relay chain. If set up in

such a way, a trusted peer must be compromised to send malformed

messages. This further limits the magnitude of the vulnerability.

If, however, any system is permitted to send data unconditionally to

the syslogd, a remote attack is possible.

sysklogd

~~~~~~~~

This causes no problems beyond those of CVE-2014-3634, because negative

PRI values are masked off, so the overflow region is still at a

maximum of 104 bytes. For more details, see CVE-2014-3634.

rsyslogd

~~~~~~~~

Rsyslogd experiences the same problem as sysklogd.

As in CVE-2014-3634, rsyslog v7 and v8 have an elevated risk due to some

table lookups used during writing. For details see CVE-2014-3634.

Contrary to CVE-2014-3634, rsyslog v5 is also likely to segfault, if

and only if the patch for CVE-2014-3634 is applied.

Note that a segfault of rsyslog can cause message loss. There are multiple

scenarios for this, but likely ones are:

- reception via UDP, where all messages arriving during downtime are lost

- corruption of disk queue structures, which can lead to loss of all disk

  queue contents (manual recovery is possible).

This list does not try to be complete. Note that disk queue corruption is likely

to occur in default settings, because the important queue information file (.qi)

is only written on successful shutdown. Without a valid .qi file, queue message

files cannot be processed.

How to Exploit

--------------

A syslog message with a PRI > MAX_INT needs to be sent and the resulting

overflow must lead to a negative value. It is usually sufficient

to send just the PRI as in this example

“<3500000000>”

Severity

--------

Given the fact that multiple changes must be made to default system configurations

and potential problems we classify the severity of this vulnerability as

MEDIUM

Note that the probability of a successful attack is LOW. However, the risk of

message loss is HIGH in those rare instances where an attack is successful. As

mentioned above, it cannot be entirely ruled out that remote code injection is

possible using this vulnerability.

Patches

-------

sysklogd

~~~~~~~~

A patch for version 1.5 is available at:

http://sf.net/projects/mancha/files/sec/sysklogd-1.5_CVE-2014-3634.diff

rsyslog

~~~~~~~

Patches are available for versions known to be in wide-spread use.

Version 8.4.2 is not vulnerable. Version 7.6.7, while no longer being project

supported, received a patch and is also not vulnerable.

Versions 8.4.1 and 7.6.6 do NOT handle integer overflows and resulting negative

PRI values correctly. So upgrading to them is NOT a sufficient solution. All

older v7 and v8 versions are vulnerable.

The rsyslog project also provides patches for older versions 5 and 3. This is

purely a convenience to those that still run these very outdated versions.

Note that these patches address the segfault issue. They do NOT offer all features

of the v7/8 series, as this would require considerable code changes. Most

importantly, the “invld” facility is not available in the v3 patch. Also,

the dead-version patches do not try to assign a specific severity to messages

with invalid PRI values nor do they prevent parsing those messages. In general,

it is suggested to upgrade to the currently supported version 8.4.2.

All patches and downloads can be found on http://www.rsyslog.com

Special thanks to mancha for his suggestions on how to fix the problem. The

core idea went into the rsyslog patches.

remote syslog PRI vulnerability – CVE: CVE-2014-3634

===============================

CVE: CVE-2014-3634

Status of this report

---------------------

FINAL

Reporter

--------

Rainer Gerhards, rsyslog project lead

Affected

--------

- rsyslog, most probably all versions (checked 5.8.6+)

- sysklogd (checked most recent versions)

- potentially others (see root cause)

Root Cause

----------

Note: rsyslogd was forked from sysklogd, and the root cause applies to

both. For simplicity, here I use sysklogd as this is the base code.

The system header file /usr/include/*/syslog.h contains the following definitions

```c
#define LOG_NFACILITIES 24      /* current number of facilities */
#define LOG_FACMASK     0x03f8  /* mask to extract facility part */
                                /* facility of pri */
#define LOG_FAC(p)      (((p) & LOG_FACMASK) >> 3)
```

[This is from Ubuntu 12.04LTS, but can be found similarly in most, if

not all, distributions].

The define LOG_NFACILITIES is used by sysklogd to size arrays for facility

processing. In sysklogd, an array for selector matching uses this. Rsyslog

has additional arrays. The macro LOG_FAC() is used to extract the facility from

a syslog PRI [RFC3164, RFC 5424]. Its result is used to address the arrays.

Unfortunately, the LOG_FACMASK permits PRI values up to 0x3f8 (1016 dec). This

translates to 128 facilities. Consequently, for PRI values above 191 the

LOG_NFACILITIES arrays are overrun.

Other applications may have similar problems, as LOG_NFACILITIES “sounds” like

the max value that LOG_FAC() can return. It would probably make sense to

check why there is a difference between LOG_NFACILITIES and LOG_FACMASK, and

if this really needs to stay. A proper fix would probably be to make LOG_FAC

return a valid (maybe special) facility if an invalid one is provided. This

is the route taken in rsyslog patches.

Effect in Practice

------------------

General

~~~~~~~

Almost all distributions do ship rsyslog without remote reception by

default and almost all distros also put firewall rules into place that

prevent reception of syslog messages from remote hosts (even if rsyslog

would be listening). With these defaults, it is impossible to trigger

the vulnerability in v7 and v8. Older versions may still be vulnerable

if a malicious user writes to the local log socket.

Even when configured to receive remote messages (on a central server),

it is good practice to configure such syslog servers to accept only

messages from trusted peers, e.g. within the relay chain. If set up in

such a way, a trusted peer must be compromised to send malformed

messages. This further limits the magnitude of the vulnerability.

If, however, any system is permitted to send data unconditionally to

the syslogd, a remote attack is possible.

sysklogd

~~~~~~~~

Sysklogd is mildly affected. Having a quick look at the current git master

branch, the wrong action may be applied to messages with invalid facility.

A segfault seems unlikely, as the maximum misaddressing is 104 bytes of the

f_pmask table, which is always within properly allocated memory (albeit to

wrong data items). This can lead to triggering invalid selector lines and

thus wrongly writing to files or wrongly forwarding to other hosts.

rsyslogd

~~~~~~~~

Rsyslogd experiences the same problem as sysklogd.

However, more severe effects can occur, BUT NOT WITH THE DEFAULT CONFIGURATION.

The most likely and thus important attack is a remote DoS. Some

of the additional tables are writable and can cause considerable misaddressing.

This is especially true for versions 7 and 8. In those versions, remote code

injection may also be possible by a carefully crafted packet. It sounds hard

to do, but it cannot be entirely ruled out [we did not check this in depth].

A segfault (and thus DoS) has the following preconditions:

- the rsyslog property “pri-text” must be used, either in

  * templates

  * conditional statements (RainerScript and property-based filters)

- the property must actually be accessed

With traditional selector lines, this depends on the facility causing

a misaddressing that leads to reading a 1 from the misaccessed location.

When the preconditions are met, misaddressing happens. The code uses a string

table and a table of string lengths. Depending on memory layout at time of

misaddressing and depending on the actual invalid PRI value, the lookup to

the string table can lead to a much too long length, which is then used in

buffer copy calculations. High PRI values close to the max of 1016 potentially

cause most problems, but we have also seen segfaults with very low invalid

PRI values.

Note that, as usual in such situations, a segfault may not happen immediately.

Instead, some data structures may be damaged (e.g. from the memory allocator)

which will later on result in a segfault.

In v5 and below, a segfault is very unlikely, as snprintf() is used to generate

the pri-text property. As such, no write overrun can happen (but garbage may still

be contained inside the property). A segfault could theoretically happen if the

name lookup table indices cause out-of-process misaddressing. We could not manage

to produce a segfault with v5.

Versions 7.6.3 and 7.6.4 already have partial fixes for the issue and will not

be vulnerable to a segfault (but are still subject to the milder issues described).

All other versions 7 and 8 are vulnerable. Version 6 was not checked as it seems

to be no longer used in practice (it was an interim version). No patch for version 6

will be provided.

Note that a segfault of rsyslog can cause message loss. There are multiple

scenarios for this, but likely ones are:

- reception via UDP, where all messages arriving during downtime are lost

- corruption of disk queue structures, which can lead to loss of all disk

  queue contents (manual recovery is possible).

This list does not try to be complete. Note that disk queue corruption is likely

to occur in default settings, because the important queue information file (.qi)

is only written on successful shutdown. Without a valid .qi file, queue message

files cannot be processed.

How to Exploit

--------------

A syslog message with an invalid PRI value needs to be sent. It is sufficient

to send just the PRI as in this example

“<201>”

Any message starting with “<PRI>” where PRI is an integer greater than 191

can trigger the problem. The maximum offset that can be generated is with

PRI equal to 1016, as this is the modulus used due to LOG_FACMASK.

Note that messages with

- PRI > 191 and

- PRI modulus 1016 <= 191

will not lead to misaddressing but go into the wrong bin.

Messages with

- PRI > 191

- PRI modulus 1016 > 191

will go into the wrong bin and lead to misaddressing.

Severity

--------

Given the triggering scenarios, the fact that multiple changes must be made to

default system configurations and potential problems we classify the severity

of this vulnerability as

MEDIUM

Note that the probability of a successful attack is LOW. However, the risk of

message loss is HIGH in those rare instances where an attack is successful. As

mentioned above, it cannot be entirely ruled out that remote code injection is

possible using this vulnerability.

This vulnerability is at least not publicly known. Based on (no) bug reports, it

seems unlikely that it is being exploited, but that’s obviously hard to know for

sure.

Patches

-------

Patches are available for versions known to be in wide-spread use.

Version 8.4.1 is not vulnerable. Version 7.6.6, while no longer being project

supported, received a patch and is also not vulnerable.

New patches are available in the follow-up advisory: http://www.rsyslog.com/remote-syslog-pri-vulnerability-cve-2014-3683/

Changelog for 8.2.0 (v8-stable)

Version 8.2.0 [v8-stable] 2014-04-02

This starts a new stable branch based on 8.1.6 plus the following changes:

- we now use doc from the rsyslog-doc project

As such, the ./doc subtree has been removed. Instead, a cache of the rsyslog-doc project’s files has been included in ./rsyslog-doc.tar.gz. Note that the exact distribution mode for the doc is still under discussion and may change in future releases. This was agreed upon on the rsyslog mailing list. For doc issues and corrections, be sure to work with the rsyslog-doc project. It is currently hosted at https://github.com/rsyslog/rsyslog-doc

- add support for specifying the liblogging-stdlog channel spec

new global parameter “stdlog.channelspec”

- add “defaultnetstreamdrivercertfile” global variable to set a default for the certfile.

Thanks to Radu Gheorghe for the patch.

- omelasticsearch: add new “usehttps” parameter for secured connections

Thanks to Radu Gheorghe for the patch.

- “action resumed” message now also specifies the module type, which makes troubleshooting a bit easier. Note that we cannot output all the config details (like destination etc) as this would require much more elaborate code changes, which we at least do not like to do in the stable version.

- add capability to override GnuTLS path in build process

Thanks to Clayton Shotwell for the patch.

- better and more consistent action naming: action queues now always contain the word “queue” after the action name

- bugfix: ompipe did resume itself even when it was still in error

See: https://github.com/rsyslog/rsyslog/issues/35

Thanks to github user schplat for reporting

Performance Tuning & Tests for the Elasticsearch Output

Original post: Rsyslog 8.1 Elasticsearch Output Performance by @Sematext

Version 8 brings major changes in rsyslog’s core – see Rainer’s presentation about it for more details. Those changes should give outputs better performance, and the Elasticsearch one should benefit a lot. Since we’re using rsyslog and Elasticsearch in Sematext‘s own log analytics product, Logsene, we had to take the new version for a spin.

The Weapon and the Target

For testing, we used a good-old i3 laptop, with 8GB of RAM. We generated 20 million logs, sent them to rsyslog via TCP and from there to Elasticsearch in the Logstash format, so they can get explored with Kibana. The objective was to stuff as many events per second into Elasticsearch as possible.

Rsyslog Architecture Overview

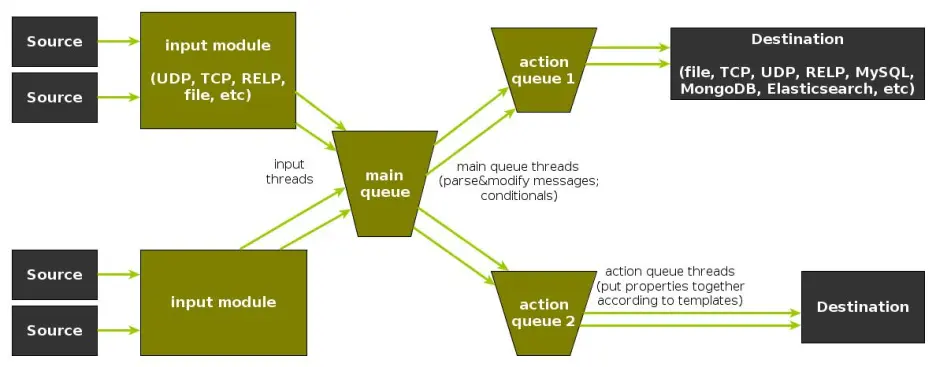

In order to tweak rsyslog effectively, one needs to understand its architecture, which is not that obvious (although there’s an ongoing effort to improve the documentation). The gist of it its architecture represented in the figure below.

- you have input modules taking messages (from files, TCP/UDP, journal, etc.) and pushing them to a main queue

- one or more main queue threads take those events and parse them. By default, they parse syslog formats (RFC-3164, RFC-5424 and various derivatives), but you can configure rsyslog to use message modifier modules to do additional parsing (e.g. CEE-formatted JSON messages). Either way, this parsing generates structured events, made out of properties

- after parsing, the main queue threads push events to the action queue. Or queues, if there are multiple actions and you want to fan-out

- for each defined action, one or more action queue threads take properties from events according to templates, and make messages that would be sent to the destination. In Elasticsearch’s case, a template should make Elasticsearch JSON documents, and the destination would be the REST API endpoint

There are two more things to say about rsyslog’s architecture before we move on to the actual test:

- you can have multiple independent flows (like the one in the figure above) in the same rsyslog process by using rulesets. Think of rulesets as swim-lanes. They’re useful for example when you want to process local logs and remote logs in a completely separate manner

- queues can be in-memory, on disk, or a combination called disk-assisted. Here, we’ll use in-memory because they’re the fastest. For more information about how queues work, take a look here

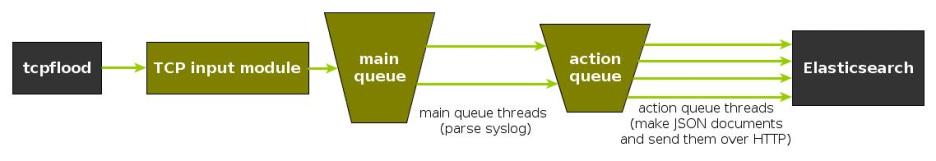

Configuration

To generate messages, we used tcpflood, a small and light tool that’s part of rsyslog’s testbench. It generates messages and sends them over to the local syslog via TCP.

Rsyslog took received those messages with the imtcp input module, queued them and forwarded them to Elasticsearch 0.90.7, which was also installed locally. We also tried with Elasticsearch 1.0 and got the same results (see below).

The flow of messages in this test is represented in the following figure:

The actual rsyslog config is listed below (in the new configuration format). It can be tuned further (for example by using the multithreaded imptcp input module), but we didn’t get significant improvements in this particular scenario.

```
module(load="imtcp")            # TCP input module
module(load="omelasticsearch")  # Elasticsearch output module

input(type="imtcp" port="13514")  # where to listen for TCP messages

main_queue(
  queue.size="1000000"            # capacity of the main queue
  queue.dequeuebatchsize="1000"   # process messages in batches of 1000 and move them to the action queues
  queue.workerthreads="2"         # 2 threads for the main queue
)

# template to generate JSON documents for Elasticsearch in Logstash format
template(name="plain-syslog" type="list") {
  constant(value="{")
  constant(value="\"@timestamp\":\"")   property(name="timereported" dateFormat="rfc3339")
  constant(value="\",\"host\":\"")       property(name="hostname")
  constant(value="\",\"severity\":\"")   property(name="syslogseverity-text")
  constant(value="\",\"facility\":\"")   property(name="syslogfacility-text")
  constant(value="\",\"syslogtag\":\"")  property(name="syslogtag" format="json")
  constant(value="\",\"message\":\"")    property(name="msg" format="json")
  constant(value="\"}")
}

action(type="omelasticsearch"
  template="plain-syslog"         # use the template defined earlier
  searchIndex="test-index"
  bulkmode="on"                   # use the Bulk API
  queue.dequeuebatchsize="5000"   # ES bulk size
  queue.size="100000"             # capacity of the action queue
  queue.workerthreads="5"         # 5 workers for the action
  action.resumeretrycount="-1"    # retry indefinitely if ES is unreachable
)
```

You can see from the configuration that:

- both main and action queues have a defined size in number of messages

- both have a number of threads that deliver messages to the next step. The action needs more because it has to wait for Elasticsearch to reply

- moving of messages from the queues happens in batches. For the Elasticsearch output, the batch of messages is sent through the Bulk API, which makes queue.dequeuebatchsize effectively the bulk size

Results

We started with default Elasticsearch settings. Then we tuned them to leave rsyslog with a more significant slice of the CPU. We monitored the indexing rate with SPM for Elasticsearch. Here are the average results over 20 million indexed events:

- with default Elasticsearch settings, we got 8,000 events per second

- after setting Elasticsearch up more production-like (5 second refresh interval, increased index buffer size, translog thresholds, etc), the throughput went up to an average of 20,000 events per second

- in the end, we went berserk and used in-memory indices, updated the mapping to disable any storing or indexing for any field, to have Elasticsearch do as little work as possible and make room for rsyslog. We got an average of 30,000 events per second. In this scenario, rsyslog was using between 1 and 1.5 of the 4 virtual CPU cores, with tcpflood using 0.5 and Elasticsearch using from 2 to 2.5

Conclusion

20K EPS on a low-end machine with production-like configuration means Elasticsearch is quick at indexing. This is very good for logs, where you typically have lots of messages being generated, compared to how often you search.

If you need some tool to ship your logs to Elasticsearch with minimum overhead, rsyslog version 8 may well be your best bet.


Changelog for 7.6.1 (v7-stable)

Version 7.6.1 [v7.6-stable] 2014-03-13

- added “action.reportSuspension” action parameter. This now permits controlling handling on a per-action basis rather than the previous “global setting only”.

- “action resumed” message now also specifies module type which makes troubleshooting a bit easier. Note that we cannot output all the config details (like destination etc) as this would require much more elaborate code changes, which we at least do not like to do in the stable version.

- better and more consistent action naming: action queues now always contain the word “queue” after the action name

- add support for “tls-less” librelp

We now require librelp 1.2.3, as we need the new error code definition. See also: https://github.com/rsyslog/librelp/issues/1

- build system improvements

  - autoconf subdir option

  - support for newer json-c packages

  Thanks to Michael Biebl for the patches.

- imjournal enhancements:

  - log entries with empty message field are no longer ignored

  - invalid facility and severity values are replaced by defaults

  - new config parameters to set default facility and severity

  Thanks to Tomas Heinrich for implementing this.

- bugfix: ompipe did resume itself even when it was still in error

See: https://github.com/rsyslog/rsyslog/issues/35

Thanks to github user schplat for reporting.

- bugfix: “action xxx suspended” did report incorrect error code

- bugfix: ommongodb’s template parameter was mandatory but should have been optional Thanks to Alain for the analysis and the patch.

- bugfix: only partial doc was put into distribution tarball. Thanks to Michael Biebl for alerting us. See also: https://github.com/rsyslog/rsyslog/issues/31

- bugfix: async ruleset did process already-deleted messages. Thanks to John Novotny for the patch.